In part 2, we examined the different styles of service and data streaming APIs that help us to decompose complex problems into smaller APIs. We also looked at how data management is becoming part of the API landscape, specifically around data analytics supported by data lakes and streaming.

In part 3, we will look at how serverless and infrastructure/network APIs are moving the concerns of API design and management into the operations space.

API Style #6: Serverless and edge APIs

Serverless is a cloud computing model that moves the management of server resources to a cloud provider or third-party as-a-service vendor. Within serverless is a specialisation called function as a service (FaaS), which enables developers to push a function-sized unit of code for deployment on demand. When not in use, functions are scaled to zero, shifting the cost model from the always-on model common with virtual servers to a consumption-based model.

GitHub Actions and GitLab CI/CD are examples of hosted build and deployment pipelines that are triggered by a git push to a hosted repository. Rather than building out the automated processes yourself, you define the pipeline in a configuration file and the vendor executes it on their hardware. Similarly, Cloudflare Workers move dynamic behaviour from your server-side code to Cloudflare's edge servers located all around the world.

While most workloads exist within your cloud provider or data centre, serverless may be hosted both inside and outside your organisation. Therefore, API platforms are beginning to extend their surface area outside of the traditional deployment model. Not all APIs managed by your API program will exist within the traditional cloud or data centre infrastructure.

Function as a service is commonly used to build microservices that are either HTTP-based or message-driven. FaaS is also used to customise the behaviour of content delivery networks (CDNs) or to execute build pipelines within cloud-based code hosting services such as GitHub. It offers a powerful solution for building and deploying APIs, event subscribers, and stream consumers. Because a function can both offer an API and react to events, teams building solutions with FaaS may construct both API producers and consumers, blurring the lines between producer and consumer teams that are perhaps clear today.
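To make the producer/consumer overlap concrete, here is a minimal sketch of one function that serves an HTTP request and also reacts to a queued message. The event shape (`source`, `path`, `body`) and the order fields are assumptions for illustration only; each real FaaS platform defines its own event format and handler signature.

```python
import json
from typing import Any


def handle_http(event: dict[str, Any]) -> dict[str, Any]:
    """Act as an API producer: answer a request with a JSON response."""
    # Hypothetical routing: the last path segment is treated as the order id.
    order_id = event["path"].rsplit("/", 1)[-1]
    body = json.dumps({"orderId": order_id, "status": "open"})
    return {"statusCode": 200, "body": body}


def handle_message(event: dict[str, Any]) -> dict[str, Any]:
    """Act as an event consumer: react to a message from a queue or stream."""
    payload = json.loads(event["body"])
    return {"processed": payload["orderId"]}


def handler(event: dict[str, Any]) -> dict[str, Any]:
    """Single entry point, invoked once per event by the (assumed) platform."""
    if event.get("source") == "http":
        return handle_http(event)
    if event.get("source") == "queue":
        return handle_message(event)
    raise ValueError("unsupported event source")
```

The same deployable unit is both an API and a subscriber, which is why ownership by a single "producer" or "consumer" team becomes harder to define.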

It is important to have a process by which APIs built upon serverless platforms are defined and managed. These APIs still need to follow proper design methodologies, and they need to be managed as any internal API would be. However, because serverless workloads may exist outside the traditional data centre, API gateways may need to be placed outside of the organisation to protect these APIs properly. This will require a significant refocus from traditional API programs, as infrastructure concerns around serverless start to overlap with API program concerns.

API Style #7: Intent-based APIs for infrastructure and network automation

Just as serverless is moving the focus of API programs deeper into the operations space, infrastructure tools and network devices are moving traditional operations staff from one-off scripting to automation through APIs. There is no greater example of this than Kubernetes, which is sometimes called a platform for platforms. The approach of the Kubernetes API is similar to that of network automation: using intent-based APIs to declare the desired state of your infrastructure.

Rather than making a sequence of API requests to reach a desired state, consumers of an intent-based API provide the desired end state itself. This is a dramatic shift from the business capability APIs we mentioned in part 1 of this series, which often execute as a workflow using a sequence of steps. Instead, intent-based APIs defer to the internal workings of the platform or device to attempt to achieve the desired state.
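The difference can be sketched in a few lines. In the hypothetical example below, the consumer submits only the desired state, and the platform works out the create, update, and delete steps by comparing it with the current state. The function names and the flat name-to-value state are assumptions made for illustration, not any particular product's API.

```python
def plan_steps(current: dict[str, str], desired: dict[str, str]) -> list[tuple[str, str]]:
    """Derive the steps that move the platform from `current` to `desired`."""
    steps: list[tuple[str, str]] = []
    # Anything present now but absent from the intent is removed.
    for name in sorted(current.keys() - desired.keys()):
        steps.append(("delete", name))
    # Anything in the intent that does not exist yet is created.
    for name in sorted(desired.keys() - current.keys()):
        steps.append(("create", name))
    # Anything present in both but with a different value is updated.
    for name in sorted(current.keys() & desired.keys()):
        if current[name] != desired[name]:
            steps.append(("update", name))
    return steps


def apply_intent(current: dict[str, str], desired: dict[str, str]):
    """Platform side: the consumer supplies `desired` and never sees the steps."""
    steps = plan_steps(current, desired)
    return dict(desired), steps


current = {"vlan10": "up", "vlan20": "up"}
desired = {"vlan10": "down", "vlan30": "up"}
new_state, steps = apply_intent(current, desired)
# steps == [("delete", "vlan20"), ("create", "vlan30"), ("update", "vlan10")]
```

With a workflow-style API, the consumer would have to issue those three calls itself, in a valid order, after first reading the current state.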

Intent-based APIs have a few advantages:

- The developer doesn’t need to understand the current state or how to get from it to the desired state. Instead, they define the desired state while internal logic determines the necessary steps

- Devices or infrastructure that are busy serving requests can opt to delay the execution of some or all steps necessary to achieve the desired state until an idle period in the future

- The changes may be rejected if the device or infrastructure cannot resolve a specific dependency or if the desired state changes would result in an invalid state, since the end state has been provided. This is unlike traditional API requests where only the information for that request is provided, without knowledge of the end state desired

- Changes may be reversed if a step fails, or at the very least the device may return to a known previous checkpoint

- The current state of the infrastructure may be separated from the target state, making it easy to determine if the changes have been applied successfully, are still being applied, or failed to apply
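Several of the advantages listed above (rejecting an invalid end state, rolling back to a checkpoint, and tracking current state separately from target state) can be illustrated with a small sketch. Everything here is hypothetical: the `Device` class, the phase names, and the validation rule are assumptions chosen to keep the example short, and settings absent from the target are left untouched for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Device:
    observed: dict[str, int] = field(default_factory=dict)  # current state
    target: dict[str, int] = field(default_factory=dict)    # desired state
    phase: str = "Idle"  # Idle, Rejected, Applied, or RolledBack


def validate(target: dict[str, int]) -> bool:
    """Hypothetical rule: every setting must be a non-negative value."""
    return all(value >= 0 for value in target.values())


def reconcile(device: Device, target: dict[str, int],
              apply_step: Callable[[str, int], None]) -> str:
    """Try to move the device to `target`, rolling back if any step fails."""
    # The whole end state is known up front, so it can be rejected before any step runs.
    if not validate(target):
        device.phase = "Rejected"
        return device.phase
    device.target = dict(target)
    checkpoint = dict(device.observed)  # known-good state to return to
    try:
        for key, value in target.items():
            apply_step(key, value)  # may raise RuntimeError if the step fails
            device.observed[key] = value
        device.phase = "Applied"
    except RuntimeError:
        device.observed = checkpoint
        device.phase = "RolledBack"
    return device.phase
```

Because `observed` and `target` are stored separately, a caller can compare them, or read `phase`, to see whether the change was applied, rejected, or rolled back.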

For network automation, YANG is the standard language for defining data models. Standard models, such as the IETF YANG modules and OpenConfig, unify configuration across vendors, while native models expose vendor-specific capabilities that the standards do not cover. Network protocols such as NETCONF and RESTCONF support device configuration using YANG models, along with RPC-based communication when necessary. gRPC is also emerging as an alternative to NETCONF, which typically runs over SSH. Additionally, network controllers may be used to manage a fleet of devices and coordinate the application of models across the infrastructure.

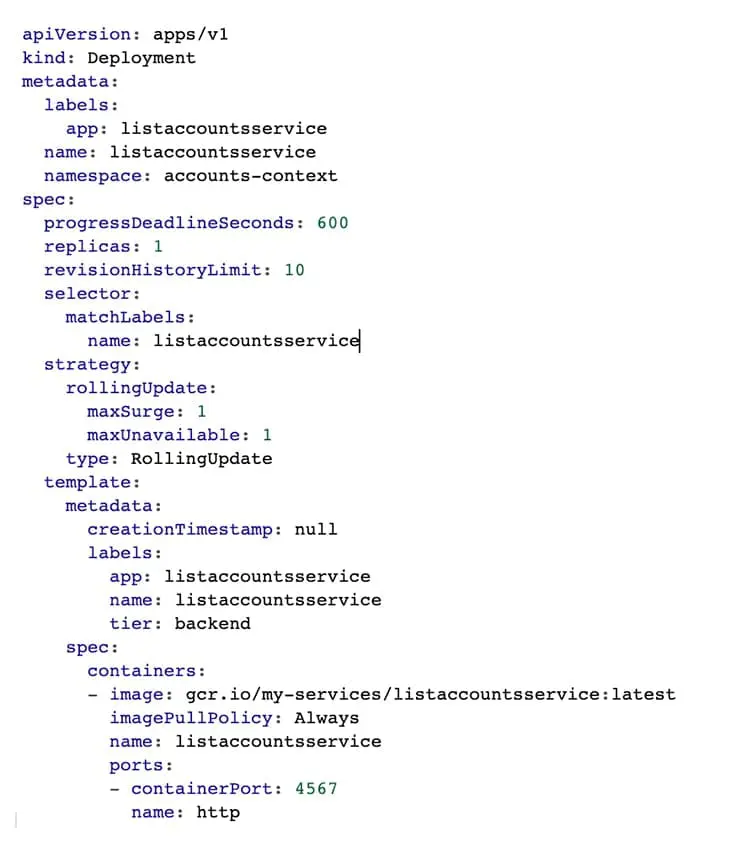
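As a rough illustration of the RESTCONF style described above, the sketch below builds (but does not send) a request that declares the desired configuration of one interface using the IETF `ietf-interfaces` YANG module. The host name, the interface name, and the `/restconf` API root are assumptions; a real device advertises its own root, and the models it supports vary by vendor.

```python
import json
import urllib.parse
import urllib.request


def build_interface_request(host: str, name: str, enabled: bool) -> urllib.request.Request:
    """Build a RESTCONF PUT that declares the desired state of one interface."""
    # Body follows the ietf-interfaces model; the interface type is an example value.
    body = {
        "ietf-interfaces:interface": [
            {"name": name, "type": "iana-if-type:ethernetCsmacd", "enabled": enabled}
        ]
    }
    # List keys in the path must be percent-encoded (e.g. names containing "/").
    key = urllib.parse.quote(name, safe="")
    url = f"https://{host}/restconf/data/ietf-interfaces:interfaces/interface={key}"
    return urllib.request.Request(
        url=url,
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={
            "Content-Type": "application/yang-data+json",
            "Accept": "application/yang-data+json",
        },
    )


# Hypothetical device address; nothing is sent until urlopen() is called.
request = build_interface_request("device.example.net", "eth0", enabled=True)
```

The request states what the interface should look like rather than which commands to run, which is the same intent-based pattern applied at the device level.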

Kubernetes uses the concept of Kubernetes objects, each a record of intent, to describe the desired state of a cluster. It offers a REST-based API on its control plane for creating, modifying, or deleting these objects across a cluster, similar to how network controllers manage fleets of devices.
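A Kubernetes object keeps the declared intent (`spec`) separate from what the cluster has observed (`status`). The sketch below represents an abbreviated Deployment as a plain Python dictionary and uses a simplified check to compare the two. The object name and the `rollout_state` helper are hypothetical, and a real Deployment also requires a selector and a pod template.

```python
from typing import Any

# Abbreviated Deployment object; a real spec also needs `selector` and `template`.
deployment: dict[str, Any] = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "orders-api", "generation": 2},
    "spec": {"replicas": 3},  # desired state, written by the API consumer
    "status": {"observedGeneration": 2, "readyReplicas": 3},  # written by the cluster
}


def rollout_state(obj: dict[str, Any]) -> str:
    """Simplified comparison of declared intent against observed status."""
    status = obj.get("status", {})
    # The controller has not yet seen the latest version of the spec.
    if status.get("observedGeneration", 0) < obj["metadata"].get("generation", 0):
        return "pending"
    # The spec has been seen, but not all replicas are ready yet.
    if status.get("readyReplicas", 0) < obj["spec"]["replicas"]:
        return "progressing"
    return "complete"
```

Here `rollout_state(deployment)` returns `"complete"` because the observed generation and ready replica count match the declared intent.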

Intent-based networking is pushing API programs even further by requiring organisations to understand how to define network and infrastructure resources through API-based automation. Though the techniques may be different from those of more traditional IT systems, the need to monitor and manage their use remains the same.

Series conclusion

This series started with an overview of business capability, experience, and event-based APIs that are the foundation for any API program. We then looked at service-based APIs and streaming APIs, including how data management is being extended into our API program. Finally, we looked at serverless and edge APIs along with intent-based APIs to understand how the API program is being extended outside the organisation and even down into infrastructure operations.

These new API styles and concerns are being combined into new solutions that address demand from customers and partners. Organisations are shifting their mindset from providing APIs that solve a single problem to providing an API platform that empowers their developers, partners, and customers to automate integration through a combination of API interaction styles. This means that your API program will need to begin looking for new ways to make this wide variety of API types and styles discoverable for everyone within the organisation. It also means that those in the role of API governance will need to start considering how events, data streams, infrastructure, and network device automation fit into the overall API program for your organisation. This will require more than side-of-desk API design and coaching support. It will require a dedicated team to help support the organisation across all of its API automation initiatives.