In modern software development, particularly in cloud-native and microservices architectures, the combination of Kubernetes and GitOps has become a widely adopted approach to deploying and managing applications. Kubernetes, an open source container orchestration platform, is known for its automation capabilities. GitOps, meanwhile, is a set of practices that uses Git as the single source of truth for infrastructure and application configuration, so that changes are made through commits and reviews rather than ad hoc commands.

But how do they work together to support API management in Kubernetes? That’s the question this article aims to answer. You’ll also learn about some of the challenges of deploying APIs on Kubernetes, how GitOps can help, and how to extend the Kubernetes API with the Tyk Operator. This Kubernetes extension brings full lifecycle API management to Kubernetes, letting you manage your APIs as Kubernetes resources defined through CustomResourceDefinitions (CRDs).

Common challenges when deploying and maintaining an API in Kubernetes

Deploying an API on Kubernetes isn’t always a walk in the park, and keeping it running brings its own challenges. Here are some of the most common:

Configuration issues

Network policies and service meshes enable you to configure which endpoints and APIs can communicate with each other. Correctly configuring your endpoints’ rules is crucial for access control and maintaining your cluster’s overall security posture.

An incorrect configuration could expose sensitive internal APIs to the public internet or grant more access to an API than intended. For instance, a misconfigured service could expose your database endpoint to the world, or to other applications in your cluster that should not have access to it.

Additionally, configuring and routinely managing the network policies in your Kubernetes cluster can become increasingly complex as the number of APIs in your cluster grows.
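To make endpoint rules concrete, here is a minimal Python sketch that builds a Kubernetes NetworkPolicy as data and checks which pods it admits. The namespace, the orders-api and orders-db labels, and port 5432 are hypothetical. The ingress_allowed helper is a simplified model of NetworkPolicy semantics (it only considers pod selectors in the same namespace and ignores namespaceSelector and ipBlock peers), not how a network plugin actually enforces policies.

```python
import json


def db_network_policy(namespace: str) -> dict:
    """NetworkPolicy letting only (hypothetical) orders-api pods reach orders-db on TCP 5432."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "orders-db-allow-api", "namespace": namespace},
        "spec": {
            # The pods this policy protects.
            "podSelector": {"matchLabels": {"app": "orders-db"}},
            # Pods selected for Ingress become isolated: only traffic some policy allows gets in.
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": "orders-api"}}}],
                    "ports": [{"protocol": "TCP", "port": 5432}],
                }
            ],
        },
    }


def _labels_match(selector: dict, labels: dict) -> bool:
    """True if every matchLabels entry in the selector is present in the pod's labels."""
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def ingress_allowed(policy: dict, source_labels: dict, port: int) -> bool:
    """Simplified check: does any ingress rule admit a same-namespace pod on this port?"""
    for rule in policy["spec"].get("ingress", []):
        peers = rule.get("from")
        ports = rule.get("ports")
        # An omitted or empty "from" admits all sources; likewise for "ports".
        peer_ok = not peers or any(
            "podSelector" in peer and _labels_match(peer["podSelector"], source_labels)
            for peer in peers
        )
        port_ok = not ports or any(p.get("port") == port for p in ports)
        if peer_ok and port_ok:
            return True
    return False


if __name__ == "__main__":
    # Print JSON that could be committed to Git or piped into `kubectl apply -f -`.
    print(json.dumps(db_network_policy("shop"), indent=2))
```

Because kubectl accepts JSON as well as YAML manifests, the printed policy can be applied as is, and a checker like this can serve as a quick test that a policy change doesn’t accidentally open the database to other pods.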

Managing multiple clusters

If your APIs run across multiple clusters, keeping them highly available and performant takes extra effort. The work grows with every cluster you add: deployments, configurations, and synchronisation all have to be managed per cluster, and because clusters often differ in configuration and resources, it’s easy to end up with inconsistent behaviour.

Automating deployments

Manual deployments are time-consuming and error-prone. If your APIs are updated frequently, automating deployments goes from beneficial to necessary.

Automating deployments with continuous integration and continuous delivery (CI/CD) pipelines reduces the risk of failed or inconsistent updates. However, setting up pipelines that work well with Kubernetes’s declarative model can be complex, especially for beginners, and often requires a solid understanding of both Kubernetes and CI/CD. The difficulty comes from the sheer variety of tools available and the steep learning curve of Kubernetes’s native automation tooling.

Handling rollbacks

When one of your APIs goes down, or you encounter a bug, it’s common practice to roll back to a previous stable version. However, manual rollbacks can be error-prone, increasing the risk of further downtime.

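Kubernetes offers some built-in help: a Deployment keeps a revision history, and the kubectl rollout undo command can return it to an earlier revision. However, this works one workload at a time and doesn’t roll back related resources such as ConfigMaps or Secrets, so it falls short in complex, multiservice scenarios. Automating rollbacks is also challenging, because it requires careful orchestration to ensure that every service returns to a compatible version while preserving the dependencies between them.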

Managing configuration drift

As your team works on different aspects of your APIs, untracked or unmanaged changes can cause an API’s configuration to drift apart across environments. This divergence can lead to unexpected behaviour and makes it harder to understand the system’s current state. For instance, a change made in staging that isn’t replicated in production can make the API behave differently in the two environments.

In Kubernetes, managing configuration through ConfigMaps and Secrets can get complicated, and the resulting drift can cause unpredictable behaviour, application errors, or security vulnerabilities. Detecting and resolving drift is hard because environments aren’t automatically kept in sync, Kubernetes environments are complex, and manual changes are easy to make and easy to forget.
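Detecting drift ultimately means comparing two descriptions of the same configuration. The following Python sketch flattens nested configuration into dotted paths and reports every path whose value differs. The staging and production ConfigMap contents in the example are hypothetical and inlined; in practice you might fetch them from each cluster (for example with kubectl get configmap -o json) or from Git.

```python
MISSING = "<missing>"


def flatten(config: dict, prefix: str = "") -> dict:
    """Turn {"a": {"b": 1}} into {"a.b": 1} so nested settings can be compared key by key."""
    flat = {}
    for key, value in config.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, path + "."))
        else:
            flat[path] = value
    return flat


def detect_drift(expected: dict, actual: dict) -> dict:
    """Map each differing path to (expected, actual); MISSING marks an absent key."""
    exp, act = flatten(expected), flatten(actual)
    return {
        path: (exp.get(path, MISSING), act.get(path, MISSING))
        for path in sorted(exp.keys() | act.keys())
        if exp.get(path, MISSING) != act.get(path, MISSING)
    }


if __name__ == "__main__":
    # Hypothetical ConfigMap contents for the same API in two environments.
    staging = {"data": {"TIMEOUT_MS": "3000", "FEATURE_X": "true"}}
    production = {"data": {"TIMEOUT_MS": "5000"}}
    for path, (want, got) in detect_drift(staging, production).items():
        print(f"{path}: staging={want} production={got}")
```

Here both the changed timeout and the feature flag that never reached production show up, which is exactly the kind of staging/production mismatch described above.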

Protecting APIs from unauthorised modification

APIs form the core of most modern applications, and any unauthorised change can have severe consequences, so your APIs’ configurations and definitions need to be managed securely. Typically, these configurations are stored in a Git repository as the single source of truth. However, ensuring that every change goes through review, automated testing, and auditing before it’s deployed to Kubernetes is challenging; it requires a well-defined process and tooling that connects Git to your Kubernetes deployments.

How GitOps helps with Kubernetes API deployment and management

GitOps, a term coined by Weaveworks, is a way of implementing continuous deployment for cloud-native applications. It uses Git as the single source of truth for declarative infrastructure and applications. But how does it help with managing API deployments on Kubernetes? Here’s a breakdown:

Simplified network and access management

GitOps practices rely on declarative specifications stored in Git. By keeping your network and access rules as code in Git, you can simplify management and reduce the chance of human error. Any change can be reviewed and audited before it’s applied, ensuring only authorised network changes are made. In addition, it provides transparency, repeatability, and easy rollbacks, if necessary.

Streamlined multiple cluster management

GitOps helps manage multiple clusters by storing all cluster configurations in Git. You can replicate shared configuration across clusters, keep them consistent, and express per-cluster differences explicitly. A GitOps agent such as Argo CD or Flux then synchronises each cluster with the repository, reducing manual intervention and errors.
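A common way to keep clusters consistent while allowing small differences is a shared base configuration plus a per-cluster overlay, both committed to Git. Tools such as Kustomize and Helm handle this in practice; the Python sketch below is only a simplified stand-in that deep-merges a hypothetical base with hypothetical overlays for two clusters, so you can see how each cluster’s effective configuration is derived.

```python
import copy


def deep_merge(base: dict, overlay: dict) -> dict:
    """Return a new dict where overlay values win and nested dicts are merged recursively."""
    merged = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Shared settings, committed once in Git (hypothetical values).
BASE = {"replicas": 2, "gateway": {"rate_limit": 100, "log_level": "info"}}

# Per-cluster differences, also committed in Git (hypothetical cluster names).
OVERLAYS = {
    "eu-west": {"replicas": 4},
    "us-east": {"replicas": 6, "gateway": {"log_level": "debug"}},
}


def render_all(base: dict, overlays: dict) -> dict:
    """Effective configuration for every cluster: base plus that cluster's overlay."""
    return {cluster: deep_merge(base, overlay) for cluster, overlay in overlays.items()}


if __name__ == "__main__":
    for cluster, config in render_all(BASE, OVERLAYS).items():
        print(cluster, config)
```

Keeping the overlays small means a change to the base (say, a new rate limit) reaches every cluster through a single commit, while genuine differences stay visible and reviewable.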

Automated deployments

GitOps naturally fits into the continuous integration, continuous deployment (CI/CD) paradigm. Any changes to your API (or infrastructure) are pushed to a Git repository, triggering automated pipelines that test and deploy your changes to the Kubernetes environment.

GitOps helps make your deployments repeatable, auditable, fast, and less prone to human error.

Effective rollbacks

GitOps handles rollbacks by relying on Git’s built-in version control. If an API fails or a bug is discovered, you can revert to a previous stable version stored in Git, and your GitOps tooling applies that state to the cluster automatically, reducing the risk of error during critical incidents.
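The key property here is that a rollback is just another change recorded in Git. The Python sketch below models a branch as an append-only list of desired states (a hypothetical ConfigHistory class, not a real Git API) and rolls back by committing an earlier state again, similar in spirit to git revert, so the faulty release stays in the history for later investigation.

```python
from dataclasses import dataclass, field


@dataclass
class ConfigHistory:
    """Hypothetical stand-in for a Git branch: an append-only list of desired states."""

    commits: list = field(default_factory=list)  # (message, state) pairs, oldest first

    def commit(self, state: dict, message: str) -> int:
        """Record a new desired state and return its index."""
        self.commits.append((message, dict(state)))
        return len(self.commits) - 1

    def head(self) -> dict:
        """The state a GitOps agent would currently apply to the cluster."""
        return self.commits[-1][1]

    def rollback_to(self, index: int) -> int:
        """Roll back by committing an earlier state again; history is never rewritten."""
        message, state = self.commits[index]
        return self.commit(state, f"Roll back to #{index} ({message})")


if __name__ == "__main__":
    history = ConfigHistory()
    history.commit({"image": "orders-api:1.4"}, "Release 1.4")
    history.commit({"image": "orders-api:1.5"}, "Release 1.5")  # suppose this one is buggy
    history.rollback_to(0)
    print(history.head())
```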

Reduced configuration drift

GitOps helps prevent configuration drift by keeping your system’s desired state in Git. A GitOps agent continuously compares the cluster’s actual state with that desired state and corrects any discrepancies, keeping your API configurations consistent across environments.
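This detect-and-correct behaviour is usually called a reconciliation loop. Agents such as Argo CD and Flux run one continuously against the cluster; the Python sketch below shows a single, heavily simplified pass over hypothetical resources, where both states are plain dictionaries and applying a change is a dictionary update rather than a call to the Kubernetes API.

```python
def reconcile(desired: dict, actual: dict) -> list:
    """One simplified reconciliation pass over resources keyed by name.

    Returns the corrective actions taken and updates `actual` in place so it
    matches `desired`. A real agent would call the Kubernetes API instead.
    """
    actions = []
    for name, spec in desired.items():
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    # Resources in the cluster but not in Git (for example, manual changes).
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("delete", name))

    for verb, name in actions:
        if verb == "delete":
            del actual[name]
        else:
            actual[name] = dict(desired[name])
    return actions


if __name__ == "__main__":
    desired = {"orders-api": {"replicas": 3}, "payments-api": {"replicas": 2}}
    actual = {"orders-api": {"replicas": 5}, "debug-pod": {"replicas": 1}}
    print(reconcile(desired, actual))  # first pass corrects the drift
    print(reconcile(desired, actual))  # second pass finds nothing to do
```

The sketch deletes anything not declared in Git; real GitOps tools typically make this pruning step configurable, so check how yours behaves before relying on it.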

Enhanced security

GitOps enhances the security of your APIs by keeping all configurations in Git, providing a history of changes, and ensuring that any modifications are reviewed and audited. With automated deployments, developers need less direct access to Kubernetes clusters, reducing the potential attack surface.

How the Tyk Operator extends Kubernetes-based APIs to support full lifecycle API management

Tyk is a powerful, open source API gateway and management platform that offers numerous features to help you manage and scale your APIs. The Tyk API management platform includes the Tyk Gateway, a lightweight yet robust gateway that offers rate limiting, request/response transformations, and authentication.

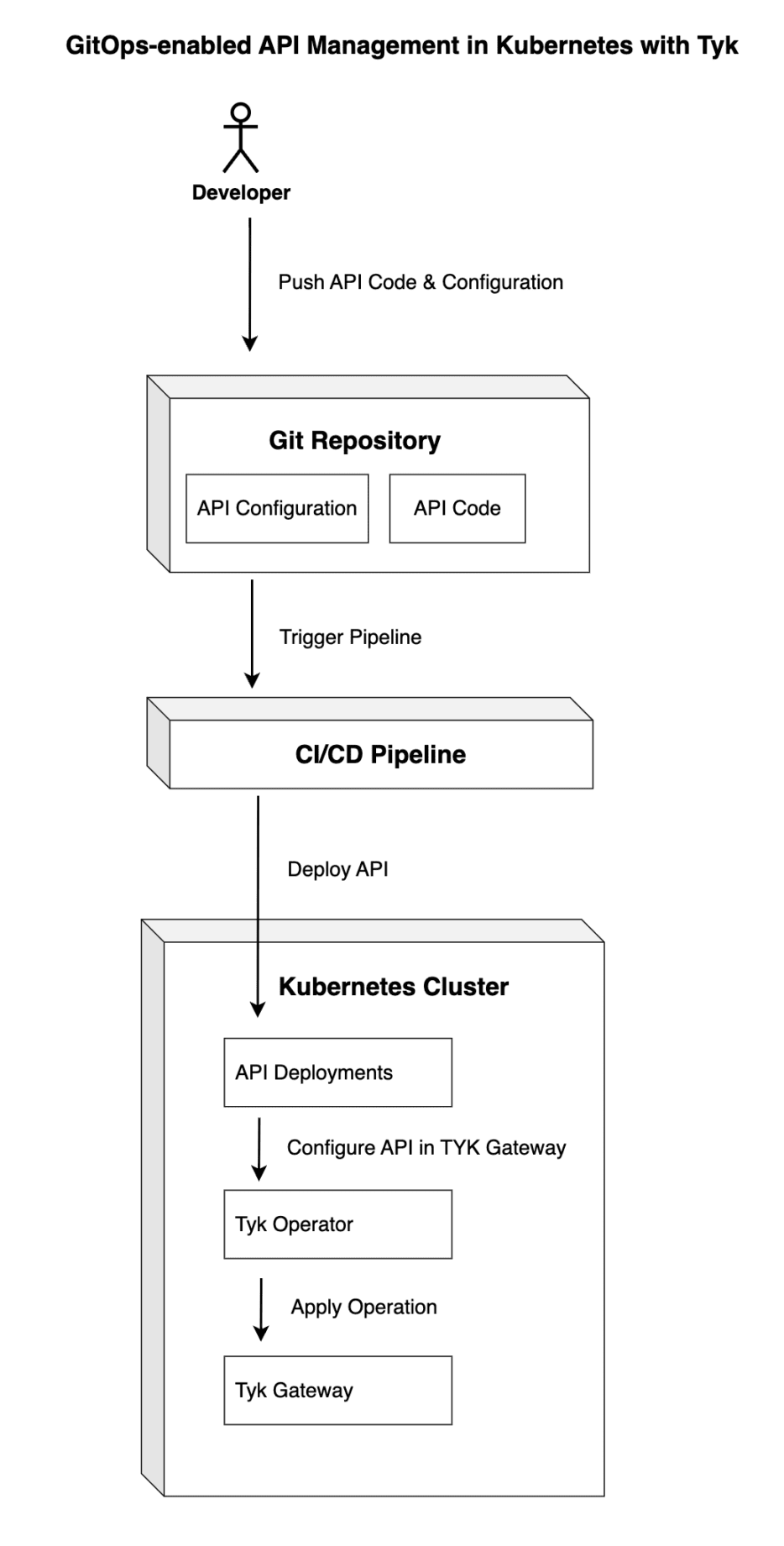

To make the most of Kubernetes, Tyk also provides the Tyk Operator. This operator is a Kubernetes-native extension that lets you manage your APIs as Kubernetes resources. It acts as a bridge between Kubernetes and the Tyk Gateway, allowing Kubernetes to control the API configuration in Tyk directly:

GitOps-enabled API Management in Kubernetes with Tyk courtesy of Daniel Olaogun

This diagram illustrates the flow from a developer pushing API code and configurations to a Git repository, which triggers a CI/CD pipeline to deploy the API into a Kubernetes cluster. The Tyk Operator configures the API in the Tyk Gateway based on the deployment.

Features of the Tyk Operator

Some of the key features of the Tyk Operator include the following:

Kubernetes-native declarative API management

The Tyk Operator brings the full power of Tyk to Kubernetes. It uses Kubernetes CRDs to enable declarative API management, an approach that aligns closely with GitOps principles: your API configurations can be version-controlled, audited, and deployed automatically.
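Here is roughly what a declarative API definition can look like. The Python sketch below builds an ApiDefinition custom resource as a dictionary and prints it as JSON, ready to commit to Git or apply with kubectl. The field names are modelled on the basic example in the Tyk Operator documentation, but CRD fields can change between operator versions, so treat them as an assumption and verify them against the CRD installed in your cluster. The httpbin name and upstream URL are illustrative only.

```python
import json


def api_definition(name: str, target_url: str, listen_path: str) -> dict:
    """Build an ApiDefinition manifest (fields assumed from the Tyk Operator docs example)."""
    return {
        "apiVersion": "tyk.tyk.io/v1alpha1",
        "kind": "ApiDefinition",
        "metadata": {"name": name},
        "spec": {
            "name": name,
            "use_keyless": True,  # no authentication: fine for a demo, not for production
            "protocol": "http",
            "active": True,
            "proxy": {
                "target_url": target_url,    # upstream service the gateway forwards to
                "listen_path": listen_path,  # path exposed on the Tyk Gateway
                "strip_listen_path": True,   # drop the prefix before proxying upstream
            },
        },
    }


if __name__ == "__main__":
    manifest = api_definition("httpbin", "http://httpbin.org", "/httpbin")
    # Commit this to Git; once the resource exists in the cluster,
    # the Tyk Operator configures the gateway from it.
    print(json.dumps(manifest, indent=2))
```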

Flexibility

Whether you’re setting up API management for the first time or migrating existing APIs to Kubernetes, the Tyk Operator offers flexible configuration options to meet the specific needs of your application and infrastructure. For instance, if you need a canary release for a new version of your service, you can configure traffic-splitting rules in your Tyk API configuration. The Tyk Operator applies these settings to the Tyk Gateway, which then directs a specified percentage of traffic to the new version of your service.
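The gateway does the traffic splitting for you, but it helps to see the idea. The Python sketch below is a generic illustration of percentage-based canary routing, not Tyk’s implementation: it hashes a stable request key (a hypothetical user ID here) into one of 100 buckets, so the same user consistently lands on the same version while roughly the configured percentage of users reach the canary.

```python
import hashlib


def choose_backend(request_key: str, canary_percent: int) -> str:
    """Send roughly canary_percent% of keys to the canary, deterministically per key."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


if __name__ == "__main__":
    routed = [choose_backend(f"user-{i}", 10) for i in range(10_000)]
    print("canary share:", routed.count("canary") / len(routed))
```

Because a key goes to the canary whenever its bucket is below the threshold, raising canary_percent step by step (for example 5, 25, 50, 100) only adds users to the new version; nobody already on the canary is moved back.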

Positive developer experience

Because APIs are configured through standard Kubernetes resources, developers can spend more time building their APIs and less time managing them. Tyk’s detailed documentation, active community support, and intuitive design further smooth the developer experience.

Why you need the Tyk Operator for Kubernetes-based API management

The Tyk Operator brings numerous benefits to Kubernetes-based API management, including the following:

Easy traffic and access management

With the Tyk Operator, you can manage your API’s traffic and access policies directly from Kubernetes, defining rate limits, access controls, and routing rules as part of your Kubernetes resources.

For instance, if you have an API with high traffic volume, you can use the Tyk Operator to apply rate-limiting policies that prevent overuse and protect the API.
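Rate limits are usually expressed as a number of requests per period. To show what such a policy enforces, here is a minimal Python sliding-window limiter. It is a generic sketch of the concept, not Tyk’s internal algorithm, and the client IDs and limits are hypothetical. Timestamps are passed in explicitly so the behaviour is easy to test; a real service would read a clock such as time.monotonic().

```python
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `rate` requests per client in any rolling window of `per` seconds."""

    def __init__(self, rate: int, per: float):
        self.rate = rate
        self.per = per
        self.history = {}  # client id -> deque of accepted request timestamps

    def allow(self, client: str, now: float) -> bool:
        window = self.history.setdefault(client, deque())
        # Forget requests that have fallen out of the rolling window.
        while window and now - window[0] >= self.per:
            window.popleft()
        if len(window) < self.rate:
            window.append(now)
            return True
        return False  # over the limit: a gateway would typically answer HTTP 429


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(rate=3, per=10)
    print([limiter.allow("client-a", t) for t in (0, 1, 2, 3)])
```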

Enhanced security

Tyk comes with built-in support for various security standards, including OAuth 2.0, JSON Web Token (JWT), basic authentication, and mutual TLS. Thanks to these capabilities, your team can focus on building the API’s functionality instead of implementing these security protocols itself.

Proxying, logging, and monitoring capabilities

The Tyk Operator integrates with Tyk’s robust proxying, logging, and monitoring tools. These provide detailed insights into your API’s performance and usage, helping you identify and resolve issues quickly.

When an API has performance issues, you can enable detailed logging and monitoring for it directly from your Kubernetes configuration using the Tyk Operator. This gives you insight into the API’s behaviour, request/response times, and error rates, which can be instrumental in troubleshooting the issue.
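Once detailed logging is on, the raw request records still have to be turned into numbers you can act on. The Python sketch below assumes a hypothetical record format with an HTTP status and a latency in milliseconds (not Tyk’s actual analytics schema), and computes the request count, the 5xx error rate, and the 95th-percentile latency using the nearest-rank method.

```python
def summarise(records: list) -> dict:
    """Summarise request records shaped like {"status": 200, "latency_ms": 42}."""
    if not records:
        return {"requests": 0, "error_rate": 0.0, "p95_latency_ms": None}
    latencies = sorted(r["latency_ms"] for r in records)
    server_errors = sum(1 for r in records if r["status"] >= 500)
    n = len(records)
    # Nearest-rank percentile: rank = ceil(0.95 * n), computed with integers.
    rank = (95 * n + 99) // 100
    return {
        "requests": n,
        "error_rate": server_errors / n,
        "p95_latency_ms": latencies[rank - 1],
    }


if __name__ == "__main__":
    # Hypothetical sample: 20 requests, two of which failed with HTTP 500.
    sample = [{"status": 500 if i in (3, 7) else 200, "latency_ms": 10 * (i + 1)} for i in range(20)]
    print(summarise(sample))
```

Tracking the 95th percentile rather than the average makes slow outliers visible, which is usually what users notice first.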

Scalability

Because the Tyk Gateway and the Tyk Operator run as ordinary Kubernetes workloads, your API management layer can use Kubernetes’s built-in scaling capabilities. This means your API management infrastructure can scale with your API workloads, maintaining performance as demand grows. For example, if your e-commerce application experiences a surge during the holiday season, a Kubernetes autoscaler can add gateway replicas to handle the increased traffic, ensuring a smooth experience for your users.
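When the gateway runs as a Deployment, Kubernetes’s HorizontalPodAutoscaler is the usual way to scale it. Its core calculation, as described in the Kubernetes documentation, is desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no scaling action when that ratio is within a tolerance (0.1 by default) of 1.0. The Python sketch below reproduces that formula with hypothetical CPU figures and min/max bounds; it ignores the real controller’s handling of unready pods, missing metrics, and stabilisation windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA formula: ceil(current_replicas * current_metric / target_metric), clamped."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no scaling action
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the autoscaler's configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical holiday surge: 3 gateway pods at 90% CPU against a 50% target.
    print(desired_replicas(3, current_metric=90, target_metric=50))
```

In this example the surge doubles the gateway from three to six replicas, and when traffic falls the same formula scales it back down, never below min_replicas.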

By extending Kubernetes with the Tyk Operator, you can bring the full power of Tyk to your Kubernetes-based APIs, achieving robust, scalable, and easy-to-manage API lifecycles.

Conclusion

In this article, you learned about the challenges of managing APIs in Kubernetes and explored how GitOps principles can address them. You were also introduced to Tyk and the Tyk Operator, and saw how the operator extends Kubernetes to provide a robust, flexible, and developer-friendly approach to API management.

Adopting GitOps for API deployment and management and using tools like the Tyk Operator can create a more efficient, reliable, and scalable infrastructure for your APIs. These practices and tools improve your day-to-day operations and lay a solid foundation for your team’s growth and future challenges.

Whether you’re just starting your journey with Kubernetes and GitOps or looking to optimise your current practices, remember that adopting them is an iterative process. Keep learning, experimenting, and improving. With the right tools and practices, you’ll be well equipped to tackle the challenges that come your way.

If you want to see how the Tyk Operator can enhance your Kubernetes-based API management, why not try it out for free? Tyk has a detailed demo that walks you through setting up and using the Tyk Operator in a Kubernetes environment. It’s a fantastic way to see firsthand the power and flexibility that Tyk brings to Kubernetes API management.

Thank you for joining us on this journey. We look forward to seeing how you implement these practices and tools to improve your API management. Keep exploring, keep learning, and never stop improving.