Many enterprises are waking up to the potential of using Apache Kafka to handle high-throughput data streams. However, exposing those streams isn’t always easy. Suddenly, you’re dealing with a flood of security and governance headaches, protocol complexity, discoverability issues and more.

Kafka’s native access control lists (ACLs) can be complex, especially at scale, making security and governance a pain. There’s also the fact that many users can’t – or won’t – install specialized Kafka clients (in fairness, if they only need quick access via HTTP or WebSocket, they have little motivation to do so).

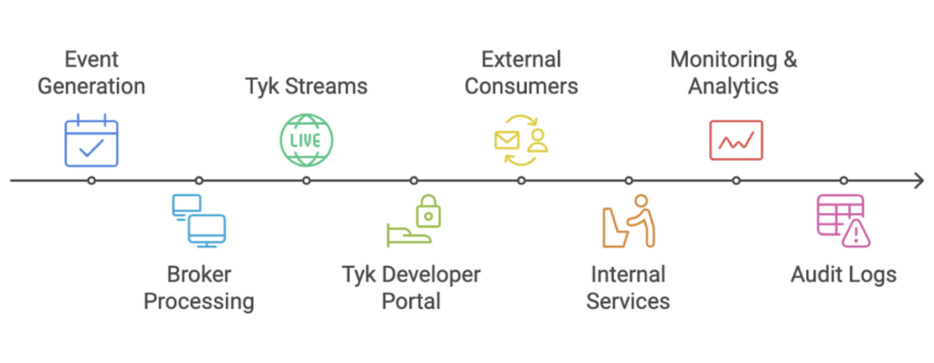

Pause. Breathe. The solution lies in combining Apache Kafka with Tyk Streams and a developer portal. Doing so means you can open up Kafka data securely and efficiently, cutting through complexity for both internal teams and external consumers.

Using Tyk Streams with a developer portal in this way not only addresses the security and protocol issues noted above but also aids discoverability, ensuring developers can find and connect to your event streams with ease.

Read on to explore all you need to know about exposing your event streams, minus the pain.

The rise of async APIs and webhooks

Surging real-time and event-driven use cases mean that async APIs – including WebSockets, Server-Sent Events (SSE) and webhooks – are increasingly critical to a growing range of global enterprises. The ability to react to data as it happens, rather than polling for updates, is something of a game changer, and one where there’s still plenty of potential for further developments, use cases and innovations.

Why the popularity? Well, low-latency data, with applications receiving events in real time without endless polling, is certainly part of the appeal. In addition, protocols such as SSE and WebSockets are widely supported and require minimal setup. The fact that webhook integrations let you push notifications to external systems instantly when an event matches certain criteria is the icing on the cake.

Add to this the fact that Kafka excels at handling large volumes of data in a publish-subscribe model, and the scene is set. However, not everyone wants to (or can) run a Kafka consumer just to get the data. Exposing Kafka streams via HTTP, SSE or WebSockets can drastically lower the barrier to entry, making it easier for developers to plug into real-time data flows using standard protocols.
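To show how low that barrier can be, here is a minimal Python sketch of a consumer reading a Kafka-backed stream over SSE with nothing but the standard library. It assumes the gateway exposes the stream at a URL you supply and accepts an API key in the `Authorization` header (check your API's authentication settings). `parse_sse` and `stream_events` are illustrative names, not part of any Tyk SDK, and the parser handles only the `data`, `event` and `id` fields of the `text/event-stream` format.

```python
import urllib.request
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional


@dataclass
class SseEvent:
    event: str           # event type ("message" when the server sends none)
    data: str            # data lines joined with "\n"
    id: Optional[str]    # last event ID seen on the stream, if any


def parse_sse(lines: Iterable[str]) -> Iterator[SseEvent]:
    """Yield events from a text/event-stream body, one decoded line at a time."""
    event_type, data_lines, last_id = "", [], None
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line dispatches the buffered event, if it carried any data.
            if data_lines:
                yield SseEvent(event_type or "message", "\n".join(data_lines), last_id)
            event_type, data_lines = "", []
            continue
        if line.startswith(":"):
            continue  # comment or keep-alive line
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # a single leading space after the colon is dropped
        if field == "data":
            data_lines.append(value)
        elif field == "event":
            event_type = value
        elif field == "id":
            last_id = value
        # Other fields (such as "retry") are ignored in this sketch.


def stream_events(url: str, api_key: str) -> Iterator[SseEvent]:
    """Connect to an SSE endpoint and yield events as they arrive."""
    req = urllib.request.Request(url, headers={
        "Accept": "text/event-stream",
        "Authorization": api_key,  # assumption: key is sent in the Authorization header
    })
    with urllib.request.urlopen(req) as resp:
        # The response iterates line by line as bytes; decode before parsing.
        yield from parse_sse(line.decode("utf-8") for line in resp)
```

For example, `for evt in stream_events("https://gateway.example.com/orders/sse", "YOUR_KEY"): print(evt.event, evt.data)`, where the URL and key are placeholders for your own gateway endpoint and credentials.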

The challenges of exposing Kafka publicly

If Kafka is already a robust platform, why not just let everyone connect? Well, plenty of reasons, actually.

First, there’s the security and access control headache. Managing Kafka’s ACLs for dozens – or hundreds! – of developers is cumbersome, to say the least. You need fine-grained controls to ensure only authorized users or services can subscribe to specific topics.

Then there’s the issue of protocol complexity. Kafka’s binary protocol isn’t web-friendly. It requires external consumers to install specialized libraries, each with unique versioning and dependency quirks. Painful.

Another challenge relates to data privacy and compliance. You might want to filter events based on user roles or PII constraints, while compliance auditors will certainly want an audit trail. How can you tell them who’s accessing which stream?

Finally, there’s the challenge of discoverability. Even if you make Kafka accessible, how do you document the asynchronous interfaces? And where do developers find these new “endpoints” for real-time data?

At the risk of blowing our own trumpet, enter Tyk Streams…

Tyk Streams: Bridging Kafka and developer portals

Tyk Streams addresses these pain points by providing a unified layer that translates Kafka (and other event brokers) into standard API protocols. It does so under Tyk’s well-established governance model, offering consistent security, rate limiting and telemetry. Everything you need to keep your internal teams, your consumers and your compliance auditors happy.

There are three key ways in which Tyk Streams does this.

Simplified onboarding via a developer portal

If you have a developer portal, where internal teams or external partners can discover your REST APIs, Tyk Streams enables you to list async APIs alongside those traditional endpoints. You can display the technical details of your streams in a standardized format using AsyncAPI specifications. Developers can then generate clients or simply read the docs to get started. They can request API keys or tokens and get immediate, self-service access to your real-time data flows.

If you’re using Tyk’s Developer Portal, you can also enjoy the benefits of automated cataloging. The portal will automatically organize your streams, including any relevant documentation or sample payloads.

Turning Kafka into webhooks, SSE or WebSockets

Another feature designed to make your life easier is the Tyk Dashboard. Using this, you can configure Tyk Streams to listen to Kafka events and then forward them to:

- Webhook endpoints: external services that process incoming HTTP requests whenever new events arrive.

- SSE or WebSocket endpoints: real-time connections that push data out to subscribers as soon as new events hit Kafka.

- HTTP GET endpoints: for simpler scenarios where occasional snapshots of Kafka data are enough.
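On the receiving end of the webhook option, all a consumer needs is an HTTP endpoint that accepts POST requests. The sketch below is a minimal Python receiver built on the standard library's `http.server`. It assumes each event arrives as a JSON request body, and the in-memory `received` list is a hypothetical stand-in for whatever processing a real service would do. A production receiver would also need TLS and request authentication, which are left out here.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

received = []  # events collected by the handler (in memory, for illustration only)


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        try:
            event = json.loads(body)
        except json.JSONDecodeError:
            # Reject payloads that are not valid JSON.
            self.send_response(400)
            self.end_headers()
            return
        received.append(event)
        # Acknowledge quickly; heavy processing belongs in a background worker.
        self.send_response(204)
        self.end_headers()

    def log_message(self, format, *args):
        pass  # silence the default per-request logging for the demo


def start_receiver(port: int = 0) -> ThreadingHTTPServer:
    """Start the receiver in a background thread; port 0 picks a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), WebhookHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Returning `204 No Content` promptly is a common webhook convention, since senders typically treat slow or failed responses as delivery errors.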

Within the Tyk Dashboard, you simply pick your input (Kafka) and output (HTTP, WS, SSE, etc.), apply policies (like authentication, rate limiting or transformations), and then publish it via the developer portal. It’s all designed with the same simple, user-friendly ethos that you’ll find in all elements of our API management solution.

Centralized security and governance

Tyk also brings simplicity and order to your security and governance. You can easily implement unified authentication and authorization using OAuth2, JWT tokens or standard API keys – whatever you prefer. No more messing with multiple ACL layers. You can also protect your event broker from spikes by controlling consumption rates at the gateway, using rate limiting and throttling.
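Gateway-side rate limiting is commonly built on a token-bucket algorithm. The Python sketch below illustrates that general technique per consumer key; it is not a description of how Tyk implements its own rate limiter, and the class names and parameters are hypothetical. Each consumer earns `rate` tokens per second up to a burst of `capacity`, and a message is forwarded only if a token is available.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allow up to `rate` messages per second, with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full so a new consumer can burst
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerConsumerLimiter:
    """One bucket per API key (single-threaded sketch: no locking)."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, consumer_key: str) -> bool:
        bucket = self.buckets.get(consumer_key)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.capacity, self.clock)
            self.buckets[consumer_key] = bucket
        return bucket.allow()
```

The injectable `clock` makes the behaviour easy to test; in a real service you would leave it as `time.monotonic`. Because each key has its own bucket, one noisy consumer exhausts only its own allowance rather than the broker's capacity.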

As we mentioned above, telemetry is another bonus when you use Tyk Streams. You can collect metrics on event throughput, error rates and usage patterns, enabling you to fine-tune resource allocation, troubleshoot faster and so much more.

How to publish Kafka streams securely

Ready to put the theory into practice? Let’s walk through exposing Kafka to a developer portal via Tyk Streams.

Step 1: Configure your Kafka connection

- Kafka topic setup: choose the topic(s) you want to expose publicly.

- Broker access: provide Tyk Streams with the credentials to connect securely to your Kafka cluster. If you’re using TLS or SASL, configure those settings.
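Before entering broker details in Tyk, it can be worth confirming that the credentials actually work from a plain Kafka client. The sketch below builds a connection config using librdkafka property names (`bootstrap.servers`, `security.protocol`, `sasl.mechanism`, `sasl.username`, `sasl.password`, `ssl.ca.location`). These are client properties, not Tyk Streams' own configuration format, and the helper name, defaults and accepted mechanism list are assumptions for illustration.

```python
from typing import Dict, Optional

# Hypothetical allow-list; extend it if your cluster uses another mechanism.
VALID_MECHANISMS = {"PLAIN", "SCRAM-SHA-256", "SCRAM-SHA-512"}


def kafka_client_config(bootstrap: str,
                        username: Optional[str] = None,
                        password: Optional[str] = None,
                        mechanism: str = "SCRAM-SHA-512",
                        ca_file: Optional[str] = None) -> Dict[str, str]:
    """Build librdkafka-style properties for a TLS (plus optional SASL) connection."""
    conf = {"bootstrap.servers": bootstrap}
    if username and password:
        if mechanism not in VALID_MECHANISMS:
            raise ValueError(f"unsupported SASL mechanism: {mechanism}")
        conf.update({
            "security.protocol": "SASL_SSL",  # SASL authentication over TLS
            "sasl.mechanism": mechanism,
            "sasl.username": username,
            "sasl.password": password,  # load from a secret store, not source code
        })
    else:
        conf["security.protocol"] = "SSL"  # TLS only, no SASL credentials
    if ca_file:
        conf["ssl.ca.location"] = ca_file  # CA bundle used to verify the brokers
    return conf
```

If you use the `confluent-kafka` package, passing the resulting dict to `confluent_kafka.Consumer` (with a `group.id` added) and calling `list_topics(timeout=10)` is one way to check connectivity and confirm that the topic you plan to expose is visible.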

Step 2: Create a “stream” in Tyk

- Input selection: in the Tyk Dashboard, select Kafka as the input.

- Output protocol: decide whether you want to expose this as a WebSocket, SSE, or HTTP-based endpoint.

- Apply policies:

  - Security policy: e.g., API keys or OAuth.

  - Rate limiting: limit the number of messages/second per consumer.

  - Transformation rules: for advanced scenarios, convert messages from Avro to JSON, filter out sensitive fields, etc.
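As an illustration of the "filter out sensitive fields" rule, here is what such a transformation does to a JSON message, sketched in Python. Tyk Streams expresses transformations in its own stream configuration, so treat this only as a picture of the logic. The field names in `SENSITIVE_FIELDS` are hypothetical, and Avro decoding is left out because it needs a schema and a third-party library.

```python
import json
from typing import Any, Iterable

SENSITIVE_FIELDS = {"email", "card_number", "ssn"}  # hypothetical field names


def redact(value: Any, sensitive: Iterable[str] = SENSITIVE_FIELDS) -> Any:
    """Recursively drop sensitive keys from a decoded JSON value."""
    sensitive = set(sensitive)
    if isinstance(value, dict):
        return {k: redact(v, sensitive) for k, v in value.items() if k not in sensitive}
    if isinstance(value, list):
        return [redact(v, sensitive) for v in value]
    return value  # scalars pass through unchanged


def transform(raw: bytes) -> bytes:
    """Decode a JSON payload, strip sensitive fields, and re-encode it compactly."""
    return json.dumps(redact(json.loads(raw)), separators=(",", ":")).encode()
```

Dropping fields (rather than masking them) keeps the outgoing schema honest: consumers never see a key they are not entitled to.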

Step 3: Add AsyncAPI spec to the developer portal

- Write the AsyncAPI document: describe your new stream (topics, message schemas, etc.).

- Portal integration: upload or link your AsyncAPI spec in the Tyk Developer Portal.

- Documentation & examples: provide code snippets or quickstarts showing how to connect via SSE, WebSocket, or even webhooks.
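A minimal AsyncAPI document for a stream like this might look as follows. The sketch builds it as a Python dict and serializes it to JSON (AsyncAPI documents can be written in JSON as well as YAML). It follows the AsyncAPI 2.6 layout, where each channel carries a `subscribe` operation; version 3.0 moves operations into a separate top-level `operations` section. The server URL, channel name and payload schema are placeholders.

```python
import json
from typing import Any, Dict


def build_asyncapi(title: str, version: str, server_url: str, channel: str,
                   payload_schema: Dict[str, Any],
                   protocol: str = "wss") -> Dict[str, Any]:
    """Return a minimal AsyncAPI 2.6 document describing one subscribable channel."""
    return {
        "asyncapi": "2.6.0",
        "info": {"title": title, "version": version},
        "servers": {
            # "wss" for a WebSocket endpoint; "https" suits SSE or HTTP GET.
            "production": {"url": server_url, "protocol": protocol},
        },
        "channels": {
            channel: {
                # In 2.x, "subscribe" means clients can receive messages here.
                "subscribe": {
                    "summary": f"Receive events from {channel}",
                    "message": {
                        "contentType": "application/json",
                        "payload": payload_schema,  # a JSON Schema object
                    },
                },
            },
        },
    }


if __name__ == "__main__":
    order_schema = {
        "type": "object",
        "properties": {"orderId": {"type": "string"}, "amount": {"type": "number"}},
        "required": ["orderId"],
    }
    doc = build_asyncapi("Order events", "1.0.0",
                         "wss://streams.example.com", "orders", order_schema)
    print(json.dumps(doc, indent=2))
```

Generating the spec from code like this keeps the published schema in step with the producer, but a hand-written YAML file works just as well for the portal.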

Step 4: Launch and test

- Dev portal visibility: confirm that your new stream appears in the portal catalog alongside your REST APIs.

- Key management: ensure that requesting or renewing access keys is straightforward.

- Monitoring: use Tyk’s analytics to watch event throughput. Set up alerts for unusual activity or error patterns.
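Alerting rules can be as simple as comparing each time window against a threshold. The Python sketch below assumes you have already exported per-window counts of delivered events and errors from your analytics; the `Window` shape and the default thresholds are hypothetical, not Tyk's export format or recommended values. It flags windows with a high error rate or with throughput well below the average.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Window:
    start: str   # label for the window, e.g. an ISO timestamp
    events: int  # events successfully delivered to consumers
    errors: int  # failed deliveries or requests


def find_anomalies(windows: List[Window], max_error_rate: float = 0.05,
                   drop_ratio: float = 0.5) -> List[str]:
    """Return human-readable alerts for unusual windows."""
    alerts = []
    baseline = mean(w.events for w in windows) if windows else 0
    for w in windows:
        total = w.events + w.errors
        rate = w.errors / total if total else 0.0
        if rate > max_error_rate:
            alerts.append(f"{w.start}: error rate {rate:.1%}")
        # A sudden drop in throughput can signal a stalled consumer or broker issue.
        if baseline and w.events < drop_ratio * baseline:
            alerts.append(f"{w.start}: throughput {w.events} below "
                          f"{drop_ratio:.0%} of mean {baseline:.0f}")
    return alerts
```

A mean over the whole range is the simplest possible baseline; a rolling window or a per-hour-of-day baseline would reduce false alarms for streams with daily traffic cycles.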

Use cases: Where secure Kafka exposure shines

Use cases for event-driven architectures keep expanding as different verticals push the boundaries of what they can achieve.

In financial services, for example, institutions can now let partners subscribe to real-time transaction notifications without implementing custom Kafka clients. In manufacturing, hardware makers can feed sensor data into Kafka, then let certain customers subscribe to relevant streams via SSE or WebSocket.

Enterprises are finding plenty of internal use cases for exposing Kafka streams as well. For example, internal teams building microservices can quickly tap into real-time events by visiting the developer portal and subscribing to the relevant async endpoints.

The benefits of exposing Kafka with Tyk Streams

Let’s round up some of the benefits you stand to gain when you expose Kafka with Tyk Streams:

- Faster time to market: No more building and maintaining custom bridging microservices. Tyk Streams handles protocol translation and event brokering out of the box.

- Better developer experience: With AsyncAPI specs published in the portal, developers find real-time streams in the same place as your REST APIs, making onboarding more consistent.

- Strong security posture: Centralized policies, unified authentication, and robust rate limiting will keep your Kafka cluster safe from misuse.

- Scalability and governance: Tyk Streams leverages Kafka’s native features (consumer groups, persistence and so on), layering on Tyk’s proven governance at scale.

- Deeper observability: With Tyk’s analytics and tracing, you get end-to-end visibility across your event-driven ecosystem, supporting seamless troubleshooting and performance tuning.

Make the most of your event-driven APIs

Kafka is unrivaled when it comes to high-throughput, real-time data ingestion. Tyk Streams takes the grunt work out of exposing Kafka endpoints via a developer portal, so you can focus on delivering value, not wrestling with custom code and complex ACLs.

By making Kafka data easily accessible, secure and discoverable, you can empower internal teams to innovate faster, monetize event streams for external partners and unify your async and sync APIs in one place.

Tyk Streams is the bridge you need to do so. With webhooks, SSE, WebSockets and AsyncAPI specs all in your developer portal, you can unleash Kafka’s potential without compromising on governance, security or user-friendliness.

If you’re ready to take the next step, start by exploring our Tyk Streams documentation for configuration details and best practices, then our quick start guide to see how to publish specs in your developer portal.

You can also reach out to our team for a guided approach to modernizing your event-driven architecture – we’re always happy to help.