Many observability, monitoring and logging tools rely on HTTP response codes as an indicator of quality – of what happened. But a simple approach of assuming 200 response codes are good, and codes in the 400s or 500s are bad, doesn’t account for the complexity of modern systems. Tyk asked Rob Dickinson, VP of Engineering at Graylog to explain…

Below, we cover:

- Why 200 (OK) response codes don’t mean everything is ok

- How this issue arose

- Examples of 200 not being ok

- What you can do to overcome this and reach status nirvana

You can watch the full webinar video via the link above or read on for our top takeaways.

200 is not always ok

In simple terms, the HTTP status 200 response code means that a server has successfully processed a request. Likewise, codes in the 400s and 500s mean a request has failed. On the surface, this seems like a really simple way to organise your metric, indicating which traffic is good and which isn’t. However, the reality is that with modern systems – and even older systems – things aren’t really this simple.

Can you trust 4xx and 5xx codes? Yes. These are usually fairly reliable indicators that there was a problem, even if they don’t always shed light on the exact class of the problem.

The real issue is that 200s may – or may not – be ok. If you assume they’re always ok, then you’re running the risk of undercounting threats and failures. And the amount of undercounting there can be pretty significant.

Why aren’t 200 response codes more reliable?

The gospel according to RFC 9110 is that the 200 (OK) response code indicates that the request has succeeded and that the content sent in the 200 response depends on the request method. Read on though, and there’s already some uncertainty about what the 200 code actually means. If it’s a GET request, for example, the response will be populated, while with a DELETE request there typically won’t be a response.

The bigger problem, however, is the word “success” – because that’s not clearly defined. And the more you look at the concept of success and the different cases that can align with the term, the greater the problem becomes.

From the perspective of the original standard, success basically means whatever the application defines as success; as long as the client and server agree on what success means, everything is fine from the standard’s perspective. However, this means it is more of a statement about the contract between the client and the server than a rigid definition that you can apply from an observability perspective.

Nervous yet? You should be! In an organisation of any size, you’re going to have a lot of different APIs. If you can’t normalise the definition of “success” across them, you’ve got a significant problem in terms of observability and of understanding the quality of those interactions.

When is 200 not ok?

As early as 1993, website providers started using custom error pages to respond to 404/500 errors with better context and direction about what to do next. Their goal was to avoid users who received unhandled error pages bouncing to another site.

Where this gets tricky is that, from a monitoring perspective, these custom error pages are served over the wire as 200s. It means you’re turning responses in the 400s and 500s into 200s from the perspective of your logging and monitoring tools. That’s clearly going to skew things. These 200 responses are definitely not ok from a monitoring and metrics perspective.

Where this comes into the web world is that public APIs, as we know and love them today, are built on web standards. And just like with the websites these APIs are replacing, developers can use HTTP codes as they see fit. They get to define success in terms of the response codes; there’s no canonical way to do so.

Even the underlying web frameworks may have different default behaviours about how those response codes are used and the appropriate client behaviour associated with that. GraphQL, for example, only returns 200 response codes by convention. Response codes aren’t used for error signalling – that’s in the payloads instead. And still this complies with the original language and intent of the RFC 9110 standard.

Another important thing to remember is that APIs use more response codes than websites. Websites stick to 200, 400, 404 and 500. APIs, though, might return 201 response codes or 204s. There’s a lot more variability in terms of what REST APIs will do and what they choose to signal. Not only that, but the misuse of HTTP codes can be difficult to correct, as you’re not just changing the behaviour of the server but the logic on all the clients – you’re changing the contract between client and server.

All the above means you can’t expect the use of HTTP response status codes to be consistent from a monitoring perspective. And if you can’t expect perfect consistency from your codes, you need your monitoring and observability stack to help you achieve that mapping so that you don’t undercount failures.

Examples of 200 not being ok

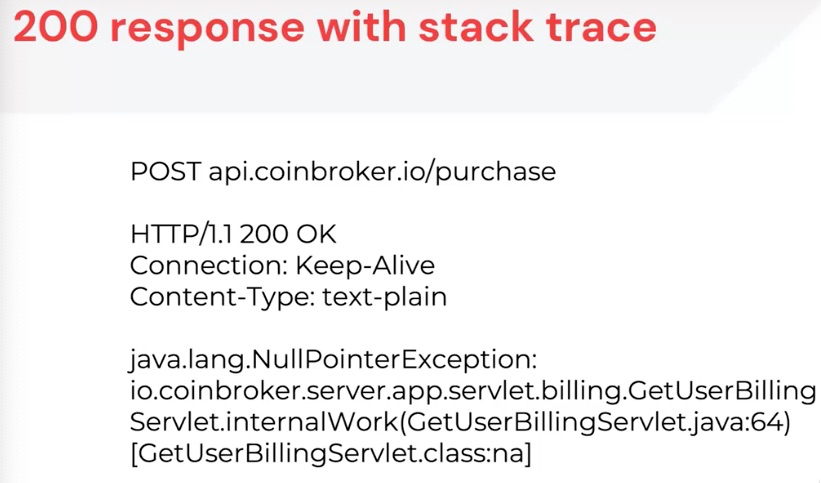

You can see how easy it is to undercount failures by considering the following example of a 200 response where the backend processing has failed. A failure can occur in the API or an upstream API that it’s dependent on. The consumer receives a stack trace that hasn’t been grabbed by an error handler. This isn’t just a failure, but also has the potential to leak information. Yet from a protocol perspective, it says 200 (OK).

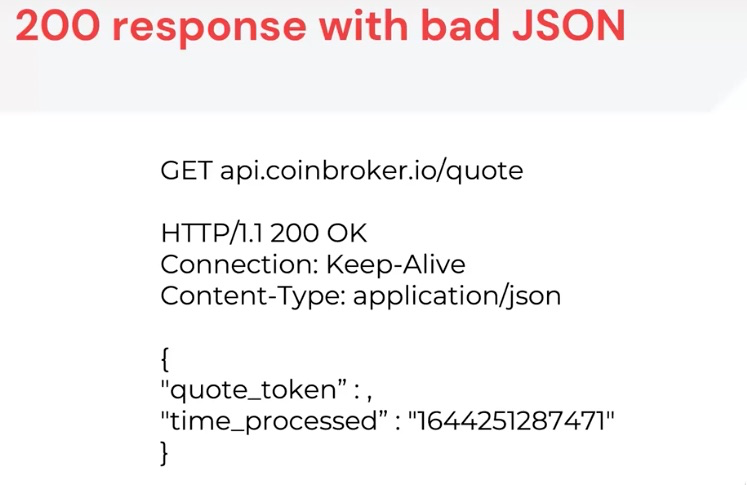

Another classic example where 200 isn’t ok is where there’s a significant breakdown of communication at the application layer versus the network layer. From a network perspective, the request was received and a response was sent. Yet that response could be invalid JSON.

Anything from a comma in the wrong place to an unescaped character could result in this, and no client is going to be able to parse this without getting an error from their JSON parser. This is going to cause downstream damage – but it’s also going to say 200 from a vertical perspective. After all, a response was sent. That’s not ok.

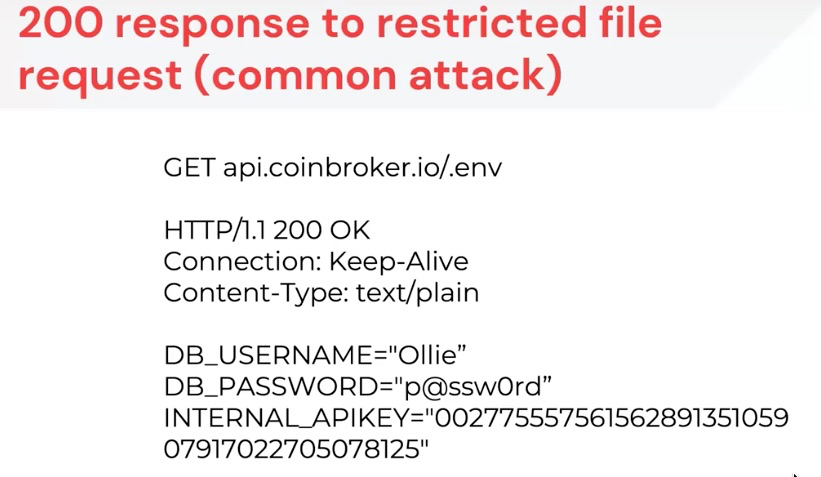

What about when an attacker requests a restricted file, such as a .env file? If the API gives the attacker that file, it could say 200 (OK) from a protocol perspective. But that’s absolutely not ok from a security perspective.

Another example of 200 not being ok is GraphQL and custom/in-house applications that use only 200 by convention.

How to gain a better understanding of response statuses

If you’re keen to reach response status nirvana, you’ll need to look beyond response codes. By all means, use them as a starting point. After all, any monitoring data is better than none.

After that, it’s time to create your own concept of response status, which you can use instead of places where you would otherwise be tempted to use response code. You can use your response status to bring in what success really means and overlay that the way the standard expected.

Next comes calculating that response status based on the full request and response together. Mix that with threat intel and knowledge about what the API specs are actually doing and you’ve got a truly reliable concept of response status – one that is independent of some of the ways that response codes can be prone to undercounting threats and failures.

API observability fundamentals

Now you’ve grown your understanding of how to reach response status nirvana for your APIs, why not dive into our on-demand API observability fundamentals programme to take your observability skills to the next level?