An introduction to the role of the controller-runtime manager in Kubernetes operators.

Would you like to simplify the creation of controllers to manage resources efficiently in a Kubernetes environment? If so, then client-go is always an option – but our experience here at Tyk has shown that the controller-runtime package will likely be a better choice. It has become a fundamental tool for most Kubernetes controllers. Why? Read on to find out!

The increased adoption of projects like Kubebuilder and Operator SDK has made it easier to create Kubernetes operator projects. With these tools, users only need to implement a minimal amount of code to get started with Kubernetes controllers.

What are controllers and operators?

As a developer working on Kubernetes projects, I inevitably work with code that uses controller-runtime. Whenever I dive into the code base, I learn something new about the underlying mechanisms of Kubernetes. In this article, I will delve into the role of the controller-runtime manager.

Controller-runtime has emerged as the go-to package for building Kubernetes controllers. Before going further, however, it is worth understanding what these controllers, and Kubernetes operators, are.

In Kubernetes, controllers observe resources, such as Deployments, in a control loop to ensure the cluster resources conform to the desired state specified in the resource specification (e.g., YAML files) 1.

On the other hand, according to Red Hat, a Kubernetes operator is an application-specific controller 2. For instance, the Prometheus operator manages the lifecycle of a Prometheus instance in the cluster, including managing its configuration and updating Kubernetes resources, such as ConfigMaps.

Both are fairly similar: they provide a control loop that drives the current state towards the desired state.
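To make the idea of a control loop concrete, here is a minimal, dependency-free sketch in Go. It is not controller-runtime code. The `State` type and the `reconcile` function are hypothetical, and the "cluster" is just an in-memory list of pod names. Each call compares the desired replica count with what exists, then creates or deletes pods until the two match.

```go
package main

import "fmt"

// State is a hypothetical, simplified view of one workload: the desired
// replica count (the spec) and the pods that actually exist (the status).
type State struct {
	DesiredReplicas int
	Pods            []string
}

// reconcile moves the current state towards the desired state and returns
// the actions it took. Calling it again on a converged state does nothing,
// which is the idempotency real reconcilers aim for.
func reconcile(s *State) []string {
	var actions []string
	// Create pods until the desired count is reached.
	for len(s.Pods) < s.DesiredReplicas {
		name := fmt.Sprintf("pod-%d", len(s.Pods))
		s.Pods = append(s.Pods, name)
		actions = append(actions, "create "+name)
	}
	// Delete surplus pods, newest first.
	for len(s.Pods) > s.DesiredReplicas {
		last := s.Pods[len(s.Pods)-1]
		s.Pods = s.Pods[:len(s.Pods)-1]
		actions = append(actions, "delete "+last)
	}
	return actions
}

func main() {
	s := &State{DesiredReplicas: 3}
	fmt.Println(reconcile(s)) // creates three pods
	s.DesiredReplicas = 1     // the user edits the spec
	fmt.Println(reconcile(s)) // deletes two pods
	fmt.Println(reconcile(s)) // nothing left to do
}
```

Running the function repeatedly is safe because it only acts on the difference between the desired and current state. This property lets controllers re-run reconciliation whenever something changes.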

The architecture of controllers

Since controllers are responsible for achieving the desired state of resources in Kubernetes, they must be informed about resource changes and act on them when required. To do this, controllers follow a specific architecture that lets them:

- Observe the resources

- Notify about events (additions, updates, deletions) on the resources

- Keep a local cache to decrease the load on the API server

- Keep a work queue to pick up events

- Run workers to perform reconciliation on resources picked up from the work queue

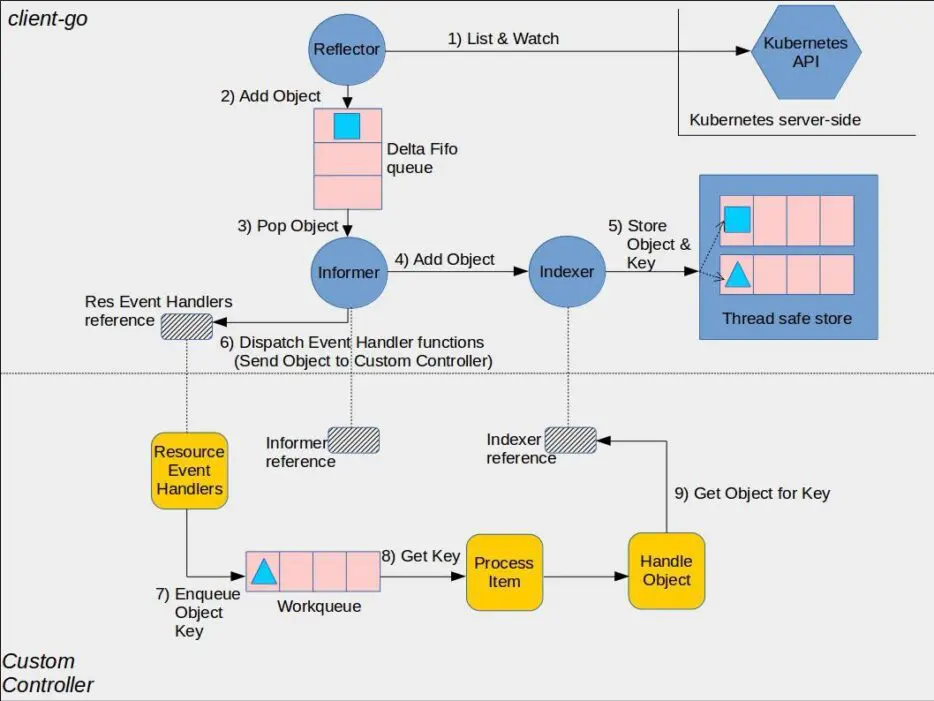

This architecture is pictured in client-go documentation:

Reference: client-go documentation.

Most end-users do not need to interact with the components outlined in blue in the diagram, because controller-runtime manages them. The following sections explain these components in simple terms.

Simply put, controllers use:

- A cache, to avoid sending every read request to the API server

- A work queue, which holds the keys of the objects that need to be reconciled

- Workers, which take keys from the work queue and reconcile the corresponding objects
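The work queue and workers can be sketched with the standard library alone. The `keyQueue` type below is a hypothetical simplification, not client-go's workqueue. It stores object keys rather than objects and collapses duplicate keys that are still waiting, so several events for the same object lead to a single reconcile. Unlike the real workqueue, it does not prevent two workers from processing the same key at once, and it has no rate-limited retries.

```go
package main

import (
	"fmt"
	"sync"
)

// keyQueue is a hypothetical, simplified work queue of "namespace/name" keys.
type keyQueue struct {
	mu      sync.Mutex
	cond    *sync.Cond
	items   []string
	pending map[string]bool // keys currently waiting in items
	closed  bool
}

func newKeyQueue() *keyQueue {
	q := &keyQueue{pending: map[string]bool{}}
	q.cond = sync.NewCond(&q.mu)
	return q
}

// Add enqueues key unless it is already waiting in the queue.
func (q *keyQueue) Add(key string) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if q.closed || q.pending[key] {
		return
	}
	q.pending[key] = true
	q.items = append(q.items, key)
	q.cond.Signal()
}

// Get blocks until a key is available; ok is false once the queue has been
// closed and fully drained.
func (q *keyQueue) Get() (key string, ok bool) {
	q.mu.Lock()
	defer q.mu.Unlock()
	for len(q.items) == 0 && !q.closed {
		q.cond.Wait()
	}
	if len(q.items) == 0 {
		return "", false
	}
	key, q.items = q.items[0], q.items[1:]
	delete(q.pending, key)
	return key, true
}

// Close stops accepting keys and wakes up any blocked workers.
func (q *keyQueue) Close() {
	q.mu.Lock()
	defer q.mu.Unlock()
	q.closed = true
	q.cond.Broadcast()
}

func main() {
	q := newKeyQueue()
	// Three events for the same object collapse into one queued key.
	q.Add("prod/web")
	q.Add("prod/web")
	q.Add("prod/web")
	q.Add("prod/api")
	q.Close()

	// A single worker drains the queue and "reconciles" each key.
	for {
		key, ok := q.Get()
		if !ok {
			break
		}
		fmt.Println("reconcile", key)
	}
}
```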

Informer

Informers watch the Kubernetes API server to detect changes in resources. Each informer keeps a local in-memory cache that implements the Store interface and holds the objects observed through the Kubernetes API. Controllers and operators then use this cache for all read requests (GET and LIST), which reduces the load on the Kubernetes API server. Informers also notify controllers about changes through the event handlers that controllers register with them.

Informers leverage components like reflectors, queues and indexers, as shown in the diagram above.
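The informer's role can be illustrated with a toy version. Everything in the sketch below is hypothetical. Watch events arrive on a Go channel instead of from the API server, objects are plain strings, and the single `Handler` callback stands in for client-go's `AddFunc`, `UpdateFunc` and `DeleteFunc`. The important detail is the order of operations: the cache is updated first and the handlers are notified afterwards, so a controller that reads from the cache in response sees the new state.

```go
package main

import (
	"fmt"
	"sort"
)

// Event is a hypothetical watch event, standing in for what the API server
// streams to a client that watches a resource.
type Event struct {
	Type string // "ADDED", "MODIFIED" or "DELETED"
	Key  string // "namespace/name"
	Obj  string // the object, simplified to a string
}

// Handler is called after the cache has been updated.
type Handler func(eventType, key string)

// toyInformer keeps a local cache of watched objects and notifies handlers.
type toyInformer struct {
	store    map[string]string
	handlers []Handler
}

func newToyInformer() *toyInformer {
	return &toyInformer{store: map[string]string{}}
}

func (i *toyInformer) AddEventHandler(h Handler) {
	i.handlers = append(i.handlers, h)
}

// Run applies each event to the cache, then notifies every handler, until
// the event channel is closed.
func (i *toyInformer) Run(events <-chan Event) {
	for ev := range events {
		if ev.Type == "DELETED" {
			delete(i.store, ev.Key)
		} else {
			i.store[ev.Key] = ev.Obj
		}
		for _, h := range i.handlers {
			h(ev.Type, ev.Key)
		}
	}
}

// Get and List are served from the local cache, never from the API server.
func (i *toyInformer) Get(key string) (string, bool) {
	obj, ok := i.store[key]
	return obj, ok
}

func (i *toyInformer) List() []string {
	keys := make([]string, 0, len(i.store))
	for k := range i.store {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	return keys
}

func main() {
	inf := newToyInformer()
	var queue []string
	// A controller's handler usually just enqueues the key for reconciliation.
	inf.AddEventHandler(func(eventType, key string) {
		fmt.Println(eventType, key)
		queue = append(queue, key)
	})

	events := make(chan Event, 3)
	events <- Event{Type: "ADDED", Key: "prod/web", Obj: "v1"}
	events <- Event{Type: "MODIFIED", Key: "prod/web", Obj: "v2"}
	events <- Event{Type: "ADDED", Key: "prod/api", Obj: "v1"}
	close(events)
	inf.Run(events)

	obj, _ := inf.Get("prod/web")
	fmt.Println("cached:", obj, "listed:", inf.List(), "queued:", queue)
}
```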

Reflector

According to godocs:

Reflector watches a specified resource and causes all changes to be reflected in the given store.

The store is a cache and comes in two flavours: a simple store and a FIFO queue. In an informer, the reflector pushes objects into a Delta FIFO queue.

By watching the Kubernetes API server, the reflector keeps a local copy of the resources up to date. Whenever an event occurs on a watched resource, meaning a new operation was performed on it, the reflector adds the change to the Delta FIFO queue shown in the diagram. The informer then pops objects from this queue, indexes them for later retrieval and dispatches them to the controller.
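The Delta FIFO behaviour can be approximated in a few lines. The `deltaFIFO` type below is a hypothetical, single-goroutine simplification of client-go's DeltaFIFO. Keys come out in first-in order, and each pop returns every change accumulated for that key since it was queued. The real type also records Replaced and Sync deltas, is safe for concurrent use and blocks on Pop.

```go
package main

import "fmt"

// Delta records one change to an object.
type Delta struct {
	Type string // "Added", "Updated" or "Deleted"
	Obj  string
}

// deltaFIFO is a hypothetical simplification of client-go's DeltaFIFO.
type deltaFIFO struct {
	order  []string           // keys in first-in order, each at most once
	deltas map[string][]Delta // accumulated changes per key
}

func newDeltaFIFO() *deltaFIFO {
	return &deltaFIFO{deltas: map[string][]Delta{}}
}

// Add appends a delta; a key that is already queued keeps its position.
func (f *deltaFIFO) Add(key string, d Delta) {
	if _, queued := f.deltas[key]; !queued {
		f.order = append(f.order, key)
	}
	f.deltas[key] = append(f.deltas[key], d)
}

// Pop removes the oldest key and returns all of its accumulated deltas.
func (f *deltaFIFO) Pop() (string, []Delta, bool) {
	if len(f.order) == 0 {
		return "", nil, false
	}
	key := f.order[0]
	f.order = f.order[1:]
	ds := f.deltas[key]
	delete(f.deltas, key)
	return key, ds, true
}

func main() {
	fifo := newDeltaFIFO()
	// The reflector pushes changes as the watch reports them.
	fifo.Add("prod/web", Delta{"Added", "web v1"})
	fifo.Add("prod/api", Delta{"Added", "api v1"})
	fifo.Add("prod/web", Delta{"Updated", "web v2"})

	// The informer pops keys and applies each delta, in order, to its indexer.
	indexer := map[string]string{}
	for {
		key, ds, ok := fifo.Pop()
		if !ok {
			break
		}
		for _, d := range ds {
			if d.Type == "Deleted" {
				delete(indexer, key)
			} else {
				indexer[key] = d.Obj
			}
		}
		fmt.Println(key, ds)
	}
	fmt.Println(indexer)
}
```

The loop in `main` plays the informer's part. It applies each delta to a map that stands in for the indexer.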

Indexer

The indexer stores objects in a thread-safe store and maintains indices over them, which makes querying objects from the cache efficient.

You can create custom indexers for specific needs, for example, an indexer that retrieves all objects with a particular field value, such as an annotation.
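A custom index can be sketched as a store that keeps a secondary lookup table next to the primary `namespace/name` key. The `indexedStore` type and the `example.com/team` annotation below are hypothetical. `IndexFunc` plays the role of client-go's `cache.IndexFunc`, which also returns an error. In controller-runtime itself, you would register a field index through the manager's `GetFieldIndexer().IndexField(...)` instead of writing a store by hand.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// Object is a hypothetical, minimal Kubernetes-like object.
type Object struct {
	Namespace, Name string
	Annotations     map[string]string
}

func (o Object) Key() string { return o.Namespace + "/" + o.Name }

// IndexFunc returns the index values an object should be filed under.
type IndexFunc func(Object) []string

// indexedStore is a thread-safe store with secondary indices.
type indexedStore struct {
	mu      sync.RWMutex
	items   map[string]Object
	funcs   map[string]IndexFunc
	indices map[string]map[string]map[string]struct{} // index -> value -> keys
}

func newIndexedStore(funcs map[string]IndexFunc) *indexedStore {
	s := &indexedStore{
		items:   map[string]Object{},
		funcs:   funcs,
		indices: map[string]map[string]map[string]struct{}{},
	}
	for name := range funcs {
		s.indices[name] = map[string]map[string]struct{}{}
	}
	return s
}

// Add stores obj and updates every index, dropping a replaced object's old entries.
func (s *indexedStore) Add(obj Object) {
	s.mu.Lock()
	defer s.mu.Unlock()
	key := obj.Key()
	if old, ok := s.items[key]; ok {
		for name, fn := range s.funcs {
			for _, v := range fn(old) {
				delete(s.indices[name][v], key)
			}
		}
	}
	s.items[key] = obj
	for name, fn := range s.funcs {
		for _, v := range fn(obj) {
			if s.indices[name][v] == nil {
				s.indices[name][v] = map[string]struct{}{}
			}
			s.indices[name][v][key] = struct{}{}
		}
	}
}

// ByIndex returns the sorted keys of objects filed under value in index.
func (s *indexedStore) ByIndex(index, value string) []string {
	s.mu.RLock()
	defer s.mu.RUnlock()
	var keys []string
	for k := range s.indices[index][value] {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	return keys
}

func main() {
	const teamKey = "example.com/team" // hypothetical annotation
	store := newIndexedStore(map[string]IndexFunc{
		"byTeam": func(o Object) []string {
			if team, ok := o.Annotations[teamKey]; ok {
				return []string{team}
			}
			return nil
		},
	})
	store.Add(Object{"prod", "web", map[string]string{teamKey: "gateway"}})
	store.Add(Object{"prod", "api", map[string]string{teamKey: "gateway"}})
	store.Add(Object{"dev", "db", map[string]string{teamKey: "data"}})
	fmt.Println(store.ByIndex("byTeam", "gateway")) // [prod/api prod/web]
}
```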

Manager

According to godocs:

Manager is required to create controllers and provide shared dependencies such as clients, caches, schemes, etc. Controllers must be started by calling Manager.Start.

The manager is central to controllers because it manages how they run. Simply put, the manager oversees one or more controllers that watch the resources of interest (e.g., Pods).

Every operator needs a manager, because the manager controls the controllers, webhooks, metrics servers, logging, leader election, caches and other components.

Refer to the Manager interface for the full list of dependencies it manages.

Controller dependencies

As the godocs mention, the manager provides shared dependencies such as clients, caches and schemes. These dependencies are shared among all the controllers the manager manages. If you register two controllers with the manager, they use the same client, cache and scheme.

Reconciliation, or the reconcile loop, involves the operators or controllers executing the business logic for the watched resources. For example, a deployment controller might create a specific number of pods as specified in the deployment specification.

The client package exposes functionality for communicating with the Kubernetes API 3. Controllers registered with a given manager use the same client to interact with the Kubernetes API. The client performs two main kinds of operations: reads and writes.

Read operations are served from the cache rather than going directly to the Kubernetes API, which reduces the load on the API server. Write operations, in contrast, go directly to the Kubernetes API. You can change this behaviour so that reads are sent to the Kubernetes API, but this is generally recommended only when there is a compelling reason to do so.

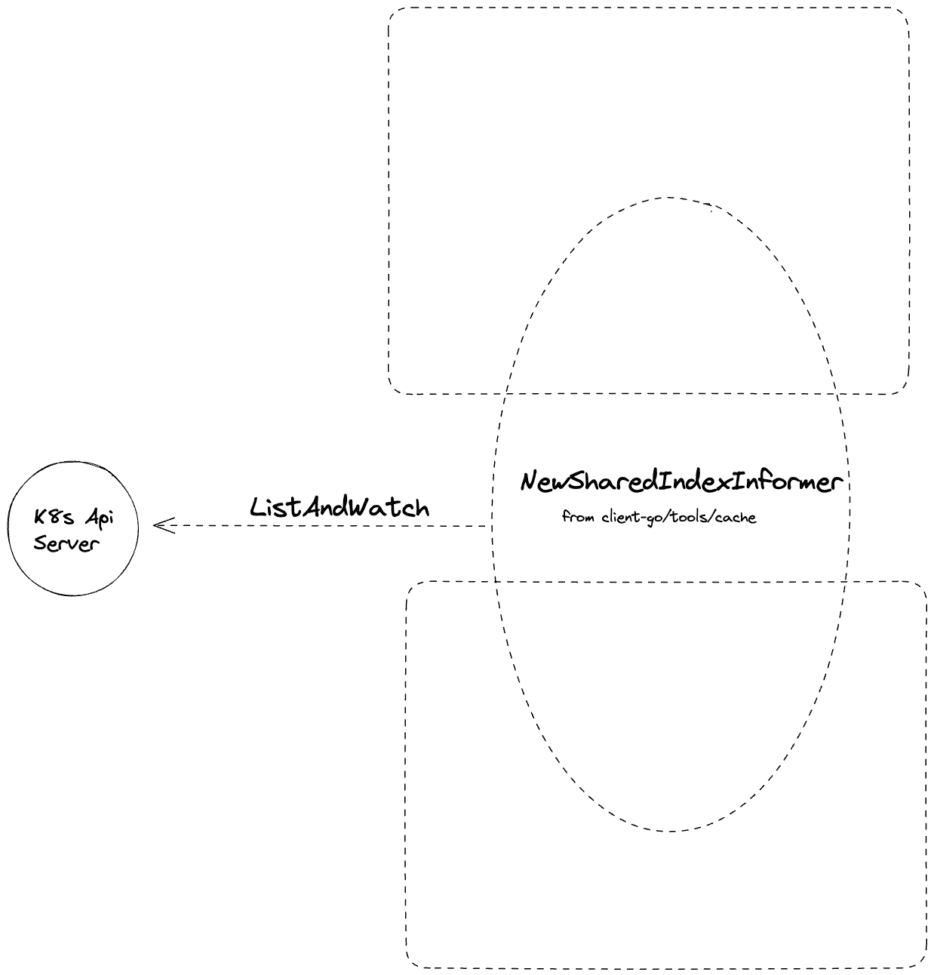
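The read/write split can be modelled with two maps. In this hypothetical sketch, `apiServer` counts the requests it receives, and `cachedClient` reads from a local map while sending writes to the API server. The sketch also shows a consequence worth keeping in mind: right after a write, a cached read can still return the old object until the watch catches up. When a controller genuinely needs an uncached read, the manager's `GetAPIReader()` returns a reader that talks to the API server directly.

```go
package main

import "fmt"

// apiServer is a hypothetical stand-in for the Kubernetes API server that
// counts the requests it receives. Watch traffic is not modelled.
type apiServer struct {
	objects  map[string]string
	requests int
}

func (a *apiServer) Update(key, obj string) {
	a.requests++
	a.objects[key] = obj
}

// cachedClient mimics the default split: reads come from the informer
// cache, and writes go straight to the API server.
type cachedClient struct {
	cache map[string]string // filled by informers in a real controller
	api   *apiServer
}

func (c *cachedClient) Get(key string) (string, bool) {
	obj, ok := c.cache[key]
	return obj, ok
}

func (c *cachedClient) Update(key, obj string) {
	c.api.Update(key, obj)
}

// syncFromWatch stands in for the informer delivering the API server's
// latest state to the cache some time after a write.
func (c *cachedClient) syncFromWatch() {
	for k, v := range c.api.objects {
		c.cache[k] = v
	}
}

func main() {
	api := &apiServer{objects: map[string]string{"prod/web": "replicas=1"}}
	c := &cachedClient{cache: map[string]string{}, api: api}
	c.syncFromWatch() // the initial LIST fills the cache

	for i := 0; i < 100; i++ {
		c.Get("prod/web") // served from memory
	}
	c.Update("prod/web", "replicas=3")
	stale, _ := c.Get("prod/web") // the cache has not caught up yet
	c.syncFromWatch()
	fresh, _ := c.Get("prod/web")
	fmt.Println(api.requests, stale, fresh) // 1 replicas=1 replicas=3
}
```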

The cache is shared across controllers, which keeps the load on the API server down. Consider a scenario where n controllers observe multiple resources in a cluster. If each controller maintained its own cache, n caches would synchronise with the API server independently, multiplying the load on it. Instead, controller-runtime uses a single shared cache for all controllers registered with a manager, backed by informers created with client-go's NewSharedIndexInformer.

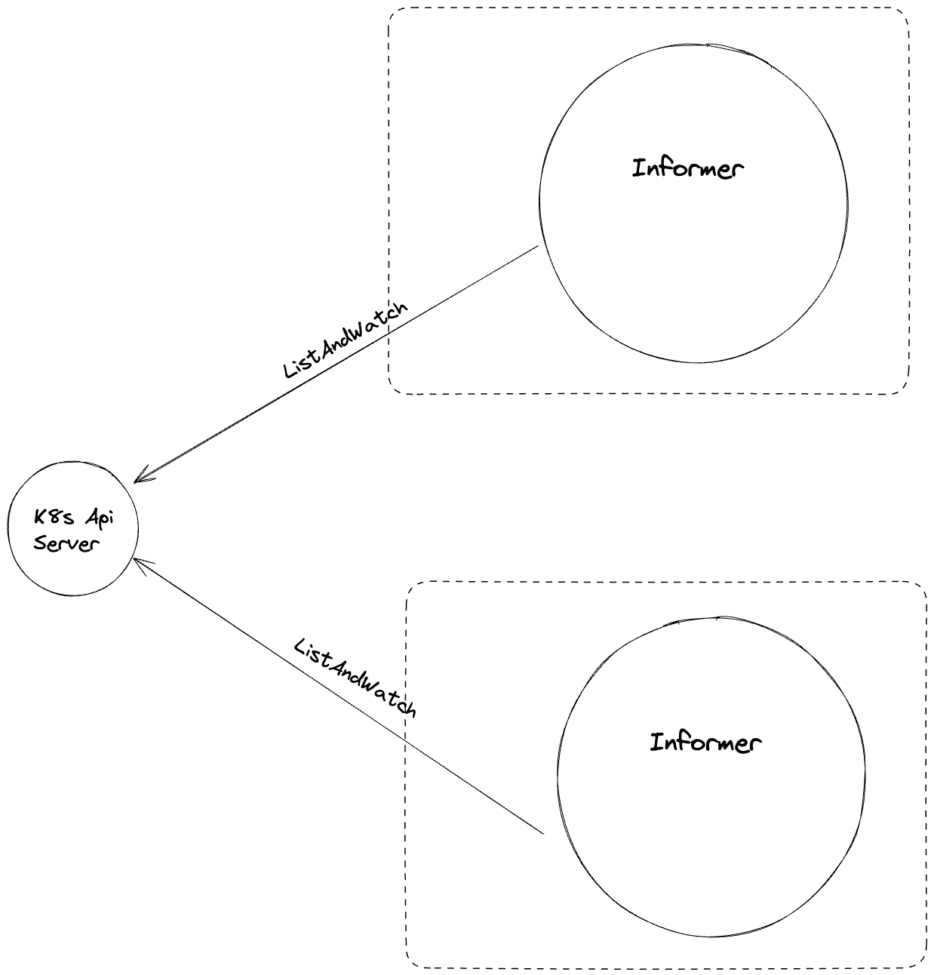

In the diagram above, two controllers maintain separate caches, so each sends its own ListAndWatch requests to the API server.

With a shared cache, controller-runtime avoids these duplicate ListAndWatch operations.

Reference: controller-runtime/pkg/cache/internal/informers.go.
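The sharing can be sketched as a map that holds at most one informer per resource type and creates each informer on first use. The `sharedInformers` type below is a hypothetical simplification of the bookkeeping in controller-runtime's informers.go, with a counter standing in for ListAndWatch streams. The `v1/Pod` style keys are our own labels for GroupVersionKinds.

```go
package main

import (
	"fmt"
	"sync"
)

// informer is a placeholder for a running SharedIndexInformer.
type informer struct{ gvk string }

// sharedInformers hands out one informer per resource type.
type sharedInformers struct {
	mu             sync.Mutex
	byGVK          map[string]*informer
	listAndWatches int // ListAndWatch streams opened against the API server
}

// Get returns the existing informer for gvk, or creates one (opening a new
// ListAndWatch) if this is the first request for that type.
func (s *sharedInformers) Get(gvk string) *informer {
	s.mu.Lock()
	defer s.mu.Unlock()
	if inf, ok := s.byGVK[gvk]; ok {
		return inf // reused: no extra load on the API server
	}
	inf := &informer{gvk: gvk}
	s.byGVK[gvk] = inf
	s.listAndWatches++
	return inf
}

func main() {
	shared := &sharedInformers{byGVK: map[string]*informer{}}
	// Controller A watches Pods and Deployments; controller B also watches Pods.
	a := shared.Get("v1/Pod")
	shared.Get("apps/v1/Deployment")
	b := shared.Get("v1/Pod")
	fmt.Println(a == b, shared.listAndWatches) // true 2
}
```

Without sharing, the same three requests would open three ListAndWatch streams instead of two.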

Code

Operators need a manager to function, whether you use Kubebuilder, Operator SDK or controller-runtime directly. The NewManager function in controller-runtime creates a new manager:

```go
var (
	// Refer to godocs for details
	// ....

	// NewManager returns a new Manager for creating Controllers.
	NewManager = manager.New
)
```

NewManager is an alias for New from the manager package:

```go
func New(config *rest.Config, options Options) (Manager, error)
```

For a simple setup, we can create a new manager as follows:

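```go
package main

import (
	"log"

	ctrl "sigs.k8s.io/controller-runtime"
	"sigs.k8s.io/controller-runtime/pkg/manager"
)

func main() {
	// GetConfigOrDie loads the kubeconfig (or the in-cluster config) and exits on error.
	mgr, err := ctrl.NewManager(ctrl.GetConfigOrDie(), manager.Options{})
	if err != nil {
		log.Fatal(err)
	}

	// Controllers are registered here, then the manager is started.
	if err := mgr.Start(ctrl.SetupSignalHandler()); err != nil {
		log.Fatal(err)
	}
}
```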

Although this snippet is enough to create a manager, the important part is configuring it through manager.Options{}.

Manager options

Under the hood, manager.Options{} configures various dependencies, such as webhooks, clients and leader election.

Scheme

As mentioned in the godocs:

Scheme is the scheme used to resolve runtime.Objects to GroupVersionKinds / Resources.

If you are building operators, you will see code similar to the following in your operator:

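```go
package main

import (
	"log"

	"k8s.io/apimachinery/pkg/runtime"
	runtimeschema "k8s.io/apimachinery/pkg/runtime/schema"
	utilruntime "k8s.io/apimachinery/pkg/util/runtime"
	clientgoscheme "k8s.io/client-go/kubernetes/scheme"
	ctrl "sigs.k8s.io/controller-runtime"
	"sigs.k8s.io/controller-runtime/pkg/scheme"
)

var (
	myscheme = runtime.NewScheme()

	// SchemeBuilder is used to add Go types to the GroupVersionKind scheme.
	// Your API types are registered with SchemeBuilder.Register(...), usually
	// from an init function in the package that defines them.
	SchemeBuilder = &scheme.Builder{
		GroupVersion: runtimeschema.GroupVersion{
			Group:   "your.group",
			Version: "v1alpha1",
		},
	}
)

func init() {
	// Register the built-in Kubernetes types (Pods, Deployments, ...).
	utilruntime.Must(clientgoscheme.AddToScheme(myscheme))
	// Add your GVKs to the scheme you provided, which is myscheme in our case.
	utilruntime.Must(SchemeBuilder.AddToScheme(myscheme))
}

func main() {
	// Hand the scheme to the manager so its client and cache can resolve your types.
	mgr, err := ctrl.NewManager(ctrl.GetConfigOrDie(), ctrl.Options{Scheme: myscheme})
	if err != nil {
		log.Fatal(err)
	}
	_ = mgr // register controllers and call mgr.Start here
}
```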

The scheme maps the Go type of your Kubernetes object to a GroupVersionKind (GVK). This matters because the RESTMapper then translates the GVK into a GroupVersionResource (GVR), which determines the HTTP path of your resource. This is how the Kubernetes client knows which endpoint to call for your resource.
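The chain from Go type to GVK, then to GVR and finally to a URL, can be illustrated without any Kubernetes dependencies. In this hypothetical sketch, `toyScheme` maps Go types to GVKs via reflection, much as `runtime.Scheme` does for registered types, and `toyRESTMapper` maps GVKs to resources. The plural `myresources` is an assumption. A real RESTMapper learns resource names from the API server's discovery information.

```go
package main

import (
	"fmt"
	"reflect"
)

type GVK struct{ Group, Version, Kind string }
type GVR struct{ Group, Version, Resource string }

// MyResource is a hypothetical custom resource type.
type MyResource struct{ Name, Namespace string }

// toyScheme maps Go types to GroupVersionKinds.
type toyScheme map[reflect.Type]GVK

func (s toyScheme) Register(obj any, gvk GVK) { s[reflect.TypeOf(obj)] = gvk }

func (s toyScheme) ObjectKind(obj any) (GVK, error) {
	gvk, ok := s[reflect.TypeOf(obj)]
	if !ok {
		return GVK{}, fmt.Errorf("type %T is not registered in the scheme", obj)
	}
	return gvk, nil
}

// toyRESTMapper maps GroupVersionKinds to GroupVersionResources.
type toyRESTMapper map[GVK]GVR

// path builds the namespaced collection URL a client would call.
func path(gvr GVR, namespace string) string {
	prefix := "/apis/" + gvr.Group + "/" + gvr.Version
	if gvr.Group == "" {
		prefix = "/api/" + gvr.Version // the core group lives under /api
	}
	return prefix + "/namespaces/" + namespace + "/" + gvr.Resource
}

func main() {
	gvk := GVK{"your.group", "v1alpha1", "MyResource"}
	scheme := toyScheme{}
	scheme.Register(&MyResource{}, gvk)
	mapper := toyRESTMapper{gvk: {"your.group", "v1alpha1", "myresources"}}

	obj := &MyResource{Name: "demo", Namespace: "prod"}
	kind, err := scheme.ObjectKind(obj)
	if err != nil {
		panic(err)
	}
	fmt.Println(path(mapper[kind], obj.Namespace)) // /apis/your.group/v1alpha1/namespaces/prod/myresources
}
```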

Cache

The cache is one of the most important pieces of operators and controllers, and its settings have a direct effect on them. As mentioned in the Controller dependencies section, controller-runtime creates informers with NewSharedIndexInformer for our controllers under the hood. To configure the cache, use cache.Options{}, which offers several settings. Configure your cache carefully, as it affects your operator's performance and resource consumption.

I specifically want to emphasise SyncPeriod and DefaultNamespaces.

SyncPeriod triggers reconciliation again for every object in the cache once the duration passes. By default, this is configured as 10 hours, with some jitter across all controllers. Since running a reconciliation over all objects is expensive, adjust this configuration carefully.

DefaultNamespaces restricts the cache to objects in the specified namespaces. For instance, to watch objects only in the prod namespace:

```go
manager.Options{
	Cache: cache.Options{
		// Only objects in the "prod" namespace are cached and watched.
		DefaultNamespaces: map[string]cache.Config{"prod": cache.Config{}},
	},
}
```

Controller

The Controller field in manager.Options{} configures options shared by all controllers registered with this manager. In recent controller-runtime versions, its type is config.Controller. Options for a single controller can also be set with controller.Options{}.

Notably, the MaxConcurrentReconciles attribute within this configuration governs the number of concurrent reconciles allowed. As detailed in the architecture of controllers section, controllers run workers to execute reconciliation tasks. These workers operate as goroutines. By default, a controller uses only one goroutine, which can be adjusted using the MaxConcurrentReconciles attribute.
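The effect of MaxConcurrentReconciles can be sketched with plain goroutines. The `runWorkers` function below is hypothetical, not controller-runtime code. It starts the given number of workers, which drain a channel of keys, and it reports the highest number of reconciles it saw running at the same time. In controller-runtime, you would set the value for a single controller with, for example, `WithOptions(controller.Options{MaxConcurrentReconciles: 3})` on the builder.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

// runWorkers starts maxConcurrentReconciles goroutines that drain keys and
// call reconcile, and returns the peak number of concurrent reconciles.
func runWorkers(keys []string, maxConcurrentReconciles int, reconcile func(string)) int32 {
	queue := make(chan string)
	var wg sync.WaitGroup
	var running, peak int32
	for w := 0; w < maxConcurrentReconciles; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for key := range queue {
				n := atomic.AddInt32(&running, 1)
				// Record the highest concurrency observed so far.
				for {
					p := atomic.LoadInt32(&peak)
					if n <= p || atomic.CompareAndSwapInt32(&peak, p, n) {
						break
					}
				}
				reconcile(key)
				atomic.AddInt32(&running, -1)
			}
		}()
	}
	for _, k := range keys {
		queue <- k
	}
	close(queue)
	wg.Wait()
	return atomic.LoadInt32(&peak)
}

func main() {
	keys := []string{"prod/a", "prod/b", "prod/c", "prod/d", "prod/e", "prod/f"}
	slow := func(string) { time.Sleep(20 * time.Millisecond) }
	fmt.Println("peak with 1 worker:", runWorkers(keys, 1, slow))
	fmt.Println("peak with 3 workers:", runWorkers(keys, 3, slow))
}
```

With a single worker, reconciles never overlap. With three workers, up to three run at once, which speeds up slow reconciles but means your reconciler must be safe to run concurrently for different objects.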

After the manager's options are configured, the NewManager function creates the controllerManager struct, which implements the Runnable interface.

While creating the controllerManager struct, controller-runtime initialises a cluster object (cluster.Cluster), which handles everything needed to interact with your cluster, including clients and caches.

The settings provided in manager.Options{} are passed on to cluster.New() to create the cluster. This calls the private function newCache(restConfig *rest.Config, opts Options) newCacheFunc, which sets up informers of type SharedIndexInformer, as referenced in the Controller dependencies section.

The next step involves registering controllers with the manager.

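```go
// 'mgr' refers to the controller-runtime Manager we've set up, and 'r' is
// your reconciler, which implements the reconcile.Reconciler interface.
err := ctrl.NewControllerManagedBy(mgr).
	For(&your.Object{}). // For() takes the object type your controller will reconcile
	Complete(r)          // Complete() builds the controller, registers it with the manager and sets up its watches
if err != nil {
	log.Fatal(err)
}
```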

To keep this post from getting too long, I will cover the controller registration process in detail in a future article.

Starting the manager

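```go
// mgr is the manager.Manager created earlier, and setupLog is a logr.Logger,
// for example ctrl.Log.WithName("setup").
// SetupSignalHandler returns a context that is cancelled on SIGTERM or SIGINT.
if err := mgr.Start(ctrl.SetupSignalHandler()); err != nil {
	setupLog.Error(err, "problem running manager")
	os.Exit(1)
}
```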

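When the manager starts, it starts its runnables in the following order:

- Internal HTTP servers: health probes, metrics and profiling, if enabled

- Webhooks

- Caches, which the manager waits on until they have synced

- Runnables that do not require leader election

- Leader election, after which the runnables that require it start (immediately, if leader election is disabled); controllers are in this group by default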

For reference, see the Start(context.Context) method of the controllerManager struct.

Where next?

There’s so much you can achieve with Kubernetes. We’ve explored it from a whole bunch of angles on the Tyk blog, so why not expand your Kubernetes knowledge courtesy of our expert team?