The rise of cloud computing, the Internet of Things (IoT), 5G communications, and edge computing has driven the need to develop applications that can quickly and efficiently exchange data and functionality. This has led to the widespread use of application programming interfaces (APIs).

As APIs grow in popularity, Kubernetes-based infrastructures must increasingly be able to handle the entire API lifecycle.

This article explores the synergy between Kubernetes and API management.

Why is API management necessary?

Thanks to Kubernetes, businesses of all sizes have optimized their IT infrastructure to levels that seemed impossible to achieve a few years ago.

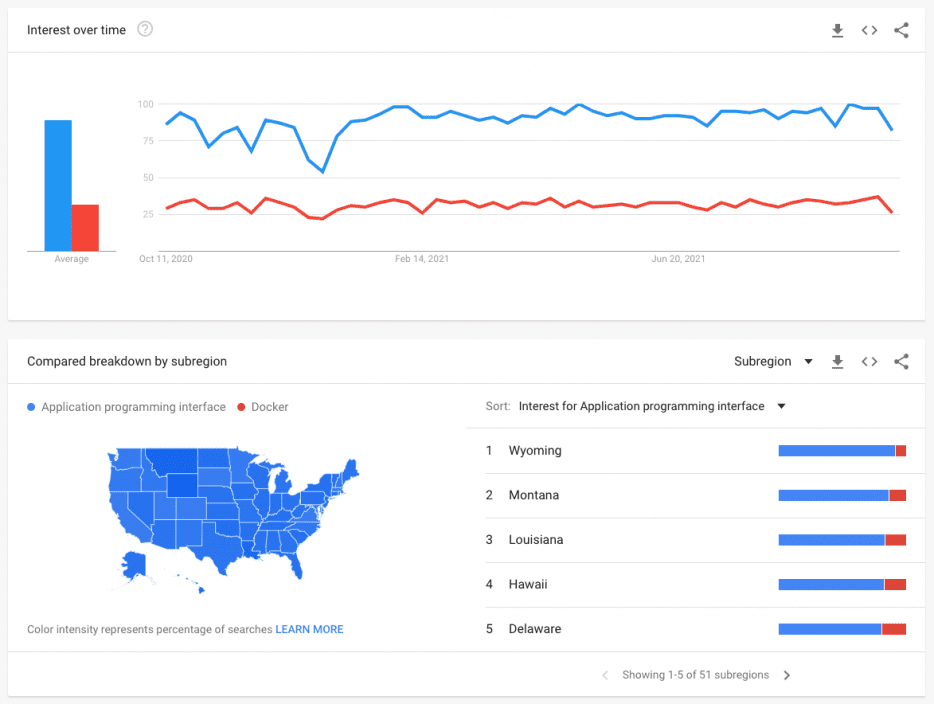

However, this means that applications on the Kubernetes cluster need to be designed to take full advantage of cloud computing benefits. This in turn has led to a reimagining of monolithic applications, first as microservices, and more recently, as a collection of APIs that both users and services can easily consume. A quick search on Google Trends confirms this growing interest in APIs, an interest that already surpasses that for Docker.

Figure: Google Trends search interest in APIs

The widespread switch to APIs has created demand for better ways to manage them, and so the concept of API management was born.

What is API management?

According to IBM, “API management refers to the process of creating, publishing, and managing API connections within an enterprise and multi-cloud setting.” API management is closely tied to API gateways, which are designed to route the internal and external API calls of the cluster. The ability to manage API calls from a centralized control panel offers multiple benefits, such as more efficient enforcement of security and access control policies and collection of data and statistics from a central location.
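To make the routing role of a gateway more concrete, here is a minimal sketch in Python. It is not taken from any particular gateway product: the `ROUTES` table, the service addresses, and the `resolve_upstream` function are all hypothetical names chosen for illustration. It assumes the gateway maps a public path prefix to an internal service and prefers the most specific (longest) matching prefix.

```python
from typing import Optional

# Hypothetical routing table: public path prefix -> internal service address.
ROUTES = {
    "/orders": "http://orders.default.svc.cluster.local:8080",
    "/orders/reports": "http://reports.default.svc.cluster.local:8080",
    "/users": "http://users.default.svc.cluster.local:8080",
}


def resolve_upstream(path: str, routes: dict[str, str] = ROUTES) -> Optional[str]:
    """Return the upstream for the longest matching path prefix, or None."""
    matches = [
        prefix
        for prefix in routes
        # Match the prefix exactly or on a path-segment boundary,
        # so "/ordersX" does not match "/orders".
        if path == prefix or path.startswith(prefix + "/")
    ]
    if not matches:
        return None
    return routes[max(matches, key=len)]
```

A real gateway would layer authentication, rate limiting, and logging on top of this lookup, which is exactly why doing it in one place is attractive.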

What is a service mesh?

Think of a service mesh as a configurable layer of infrastructure that discovers and tracks how microservices interact with each other, usually by attaching a sidecar proxy to each of them. Through a centralized control plane, the service mesh helps route requests from one service to another, thus facilitating service-to-service connectivity.

The key benefit of using a service mesh is that the added infrastructure can easily be used in cloud native environments such as Kubernetes, which gives it enormous flexibility and scalability. However, such scalability comes at a cost. As the number of APIs grows, so does the complexity and, with it, the number of attack vectors against the infrastructure. To ensure better security and smooth operations, you need a more fully integrated way to manage the entire lifecycle of the APIs. This is where API management and API gateways come into play.

When using an API management tool, your DevOps team has a centralized control panel that facilitates the design, creation, publication, versioning, consumption, and monitoring of service mesh APIs. This both reduces complexity and improves security.

This synergy is why pairing a service mesh with an API management platform has become so widespread.

How API management helps Kubernetes

An API management solution builds upon the inherent benefits of Kubernetes, giving your team stronger communication and capabilities.

Scalability

One of the strengths of Kubernetes is its scalability. However, such scalability brings with it the challenge of keeping each application secure and under control. In a world where API-centric applications are becoming the norm, this can create a problem for administrators, as Kubernetes does not have a built-in mechanism for API management. Integrating an API gateway makes it easier to scale each application or service as needed, because a centralized API management portal offers real-time visibility into how the APIs are being consumed.

Flexibility

Another key advantage of Kubernetes is the flexibility that comes from its open-source nature, which allows it to adapt to a wide range of use cases. An API management platform expands Kubernetes’ flexibility by allowing it to easily interact with other APIs, including legacy systems, which greatly facilitates a smooth digital transformation.

Easier troubleshooting

As more layers of services and plug-ins are added to the Kubernetes deployment, you may find it increasingly difficult to detect the cause of issues in a timely manner. For example, errors in deploying a service can be caused by changes in its configuration. An API management platform can significantly decrease that complexity by offering regular monitoring and alerts on your applications. Not only will you get detailed information about each API call’s actions, but you can enable custom notifications to more easily detect any errors or possible attacks on the cluster.
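As a rough illustration of the kind of custom notification described above, the following Python sketch flags an API whose recent error rate climbs past a threshold. The `ErrorRateAlert` class, its window size, and its threshold are hypothetical and not tied to any specific platform. It assumes that HTTP status codes of 500 and above count as errors.

```python
from collections import deque


class ErrorRateAlert:
    """Track the most recent responses and report when too many are errors."""

    def __init__(self, window: int = 100, threshold: float = 0.2):
        # Only the last `window` status codes are kept; older ones drop off.
        self.statuses = deque(maxlen=window)
        self.threshold = threshold

    def record(self, status: int) -> bool:
        """Record one response; return True if an alert should fire."""
        self.statuses.append(status)
        errors = sum(1 for s in self.statuses if s >= 500)
        return errors / len(self.statuses) > self.threshold
```

In practice the `True` result would trigger whatever channel your team uses for alerts, while the sliding window keeps a single old failure from firing alerts indefinitely.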

Monitoring and data collection

One advantage of managing APIs through a gateway is that since all traffic must pass through a single point, it is easy to collect a variety of data. This streamlines the monitoring and analysis of API calls, which is invaluable for both the security and optimization of the Kubernetes cluster.
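Because every call crosses the same point, per-API statistics reduce to a simple aggregation. The sketch below shows one way this could look in Python. The `CallRecord` fields and the `summarize` function are assumptions made for this example, not the data model of any real gateway.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CallRecord:
    """One API call as a gateway might log it (hypothetical fields)."""
    api: str
    status: int
    latency_ms: float


def summarize(records: list[CallRecord]) -> dict[str, dict[str, float]]:
    """Aggregate call count, error rate, and average latency per API."""
    totals = defaultdict(lambda: {"calls": 0, "errors": 0, "latency_sum": 0.0})
    for r in records:
        t = totals[r.api]
        t["calls"] += 1
        t["errors"] += 1 if r.status >= 500 else 0  # treat 5xx as errors
        t["latency_sum"] += r.latency_ms
    return {
        api: {
            "calls": t["calls"],
            "error_rate": t["errors"] / t["calls"],
            "avg_latency_ms": t["latency_sum"] / t["calls"],
        }
        for api, t in totals.items()
    }
```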

Optimized workflow

Today it is unthinkable to manage a Kubernetes cluster without CI/CD integration. Using an API gateway greatly facilitates the automation of different processes related to API management.

How API management meets challenges

Despite its benefits, the task of successfully deploying and configuring Kubernetes storage and networking is still extremely complex. This complexity is one of the biggest challenges to overcome, since a bad configuration could cause service interruptions or, worse, put the cluster at risk. The challenge is especially acute for teams attempting to modernize legacy applications, but using a centralized API management tool helps to reduce this complexity.

Declarative API management

One of the biggest advantages of managing APIs through a gateway is that you can manage the APIs running on Kubernetes declaratively: you describe the desired end configuration, and the system works to reach and maintain that state. This gives you a high degree of reliability because you can easily version all changes in the deployment. The gateway provides the Kubernetes deployment with reliable full-lifecycle API-management capabilities.
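The core of a declarative approach is comparing what you asked for with what currently exists. The following Python sketch shows that comparison under simplifying assumptions: each API definition is a plain dictionary keyed by name, and `plan_changes` is a hypothetical helper, not part of Kubernetes or of any gateway.

```python
def plan_changes(
    desired: dict[str, dict], actual: dict[str, dict]
) -> dict[str, list[str]]:
    """Compare desired and actual API definitions and list what must change."""
    # Declared but not yet deployed.
    create = sorted(name for name in desired if name not in actual)
    # Deployed but no longer declared.
    delete = sorted(name for name in actual if name not in desired)
    # Present on both sides but with a different definition.
    update = sorted(
        name
        for name in desired
        if name in actual and desired[name] != actual[name]
    )
    return {"create": create, "update": update, "delete": delete}
```

Since the desired state is just data, it can live in version control, and rolling back becomes a matter of reapplying an earlier revision.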

Enhanced security

Using an API gateway to handle all inbound and outbound traffic increases the security of the Kubernetes cluster. You can centrally control which users and services have access to each resource through Kubernetes’ native authorization and authentication mechanisms. Additionally, the API gateway serves as a barrier at which you can detect and block threats before they reach the cluster.
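Centralized access control can be pictured as a single lookup that every request passes through. The Python sketch below is a deliberately simplified illustration and does not use Kubernetes RBAC objects or any gateway API. The `POLICIES` table, the caller names, and `is_allowed` are hypothetical. It assumes a deny-by-default model in which unknown callers get no access.

```python
# Hypothetical policy table: caller identity -> (method, api) pairs it may invoke.
POLICIES = {
    "mobile-app": {("GET", "orders"), ("POST", "orders")},
    "reporting-service": {("GET", "orders"), ("GET", "users")},
}


def is_allowed(
    caller: str,
    method: str,
    api: str,
    policies: dict[str, set[tuple[str, str]]] = POLICIES,
) -> bool:
    """Allow a call only if the caller has an explicit matching grant."""
    # Unknown callers fall back to an empty set, so they are always denied.
    return (method.upper(), api) in policies.get(caller, set())
```

Keeping the rules in one table means a change in policy is made once at the gateway rather than in each service.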

Multi-cloud API management

One of the biggest challenges Kubernetes currently faces is its deployment in a multi-cloud environment. An API management solution allows you to efficiently manage multi-cloud environments as it collects information from all the APIs in each cloud so you can map and manage them from a centralized control panel.

Powerful insights

Understanding in-depth how APIs are consumed is critical to improving Kubernetes cluster performance. An API gateway can collect invaluable data to monitor and analyze the behavior of API services and users. You can use this data to determine the future direction of your applications.

Conclusion

The widespread use of API-centric applications and services has increased the complexity of Kubernetes deployments, but the implementation of API management provides a clear, customizable solution. This makes API management platforms an ideal complement to your Kubernetes workflow.

If you are looking for assistance with your API management, Tyk is a leading cloud native API and service management platform that gives you the ability to instantly connect Kubernetes with other systems and services. You can use it to easily design, deploy, and scale APIs. From its convenient control panel, Tyk offers you full API lifecycle management at your fingertips.