Your API is growing, and so is your customer base. Your product development has focused on one region of the world, so your footprint has grown within a single data center close to that region. Eventually, your team decides it is time to expand into new regions. How does this affect your API traffic as you onboard customers in those regions? This article outlines the challenges involved and how your API gateway needs to be equipped to respond.

The challenges multiple data centers pose for APIs

An API operating within a single data center has easy access to everything it needs. The API gateway typically sits only a few network hops away from the API backend servers. But what happens when you need to expand your API beyond this single data center?

As you move from one data center to several, challenges start to emerge. Your API backends may spread around the world, or at least across multiple regions within a single country. This increases network latency between an API gateway in one region and an API backend in another, so each API request takes longer to process. And if the region hosting your only API gateway goes offline, your ability to process API requests drops to zero. To address these concerns, you need your API gateway deployed to multiple data centers.

Perhaps your API needs to serve regions that your current cloud provider does not cover. You now need to deploy your API to a second cloud provider to serve those regions, and you must keep your API gateway configuration synchronized across multiple providers without introducing misconfigurations.

These challenges are not new. In fact, they are common for APIs that are designed to reach a global audience. Addressing them, however, isn’t straightforward and requires careful planning to avoid these common issues:

- Misconfigured authorization rules – every change to your authorization rules must be coordinated across multiple API gateway instances in several regions. One misstep, and your API won’t enforce the right authorization rules in every region.

- Gateway route misconfiguration – replicating configurations across API gateways in multiple data centers may result in configuration drift. As API requests are spread across these gateways, intermittent errors may occur, leading to hours of troubleshooting to find the routing misconfiguration.

- Slower API responses – you can keep your API gateway configurations in a single shared datastore, but that slows down API responses, because gateways around the world must call back to the one data center that stores the configuration. Instead, each gateway should keep a local cache of the configuration that is updated from a central location.
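To make configuration drift more concrete, here is a minimal Python sketch of one way to detect it, assuming each regional gateway can export its routing and authorization rules as a JSON-serializable dictionary. The config shape, region names, and the `config_fingerprint` and `find_drift` helpers are hypothetical; real gateways expose their configuration in product-specific formats.

```python
import hashlib
import json


def config_fingerprint(config: dict) -> str:
    """Return a stable SHA-256 fingerprint of a (hypothetical) gateway config.

    Keys are sorted at every nesting level, so two logically identical
    configs produce the same fingerprint regardless of key order.
    """
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_drift(expected: dict, deployed: dict[str, dict]) -> list[str]:
    """Return the sorted list of regions whose config differs from `expected`."""
    want = config_fingerprint(expected)
    return sorted(
        region
        for region, config in deployed.items()
        if config_fingerprint(config) != want
    )


if __name__ == "__main__":
    expected = {
        "routes": {"/orders": "orders-service:8080"},
        "auth": {"/orders": ["orders:read"]},
    }
    deployed = {
        # Same content as `expected`, only the key order differs: no drift.
        "us-east": {"auth": {"/orders": ["orders:read"]},
                    "routes": {"/orders": "orders-service:8080"}},
        # Wrong upstream port: route drift.
        "eu-west": {"routes": {"/orders": "orders-service:8081"},
                    "auth": {"/orders": ["orders:read"]}},
        # Missing required scope: authorization drift.
        "ap-south": {"routes": {"/orders": "orders-service:8080"},
                     "auth": {"/orders": []}},
    }
    print(find_drift(expected, deployed))  # ['ap-south', 'eu-west']
```

Comparing fingerprints catches drift in both routing and authorization rules, but it only tells you that a region differs, not why; a real tool would also diff the two configurations.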

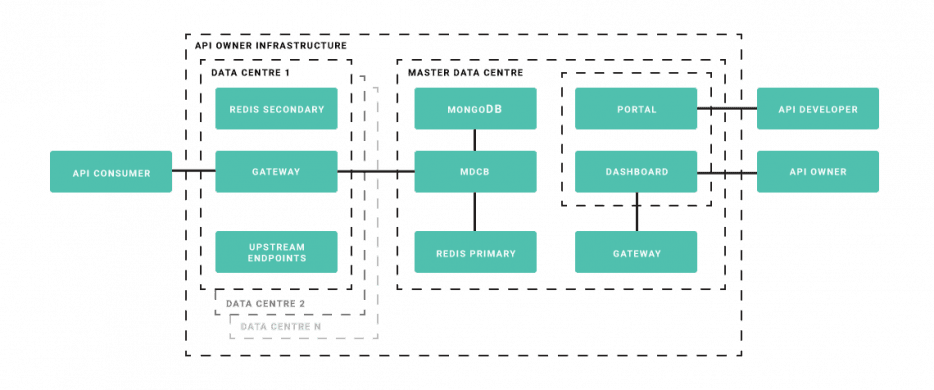

What is required is a new way of thinking about your API gateway: separating the data plane from the control plane so that your API gateways remain synchronized across data centers.

The need for an API gateway control plane

Those familiar with network engineering are likely comfortable with the terms data plane and control plane. The data plane processes the network traffic, while the control plane is responsible for configuring the data plane. This separation of concerns ensures that network processing is able to continue with high priority, while configuration changes are applied when possible.

When deploying an API gateway to a single data center, a basic installation involves several API gateway instances along with a dashboard. In this scenario, the API gateway may appear to blend the data and control planes, because everything from reporting to configuration is managed through the dashboard.

Well-architected API gateways recognize the need to separate the data plane, which processes incoming HTTP requests, from the control plane, which manages the routing and authorization rules the data plane uses. With this architecture, a deployment can expand from a single data center to multiple data centers as needed. This flexibility is important for adding new data centers as your business grows and the needs identified earlier emerge.
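The following Python sketch illustrates that separation under simplifying assumptions: a single process, an in-memory control plane, and a hypothetical `GatewayConfig` shape that maps path prefixes to upstreams and required scopes. None of these classes correspond to a real gateway product's API. The data plane only reads read-only snapshots, while the control plane validates rules and publishes new snapshots.

```python
from dataclasses import dataclass
from types import MappingProxyType
from typing import Mapping


@dataclass(frozen=True)
class GatewayConfig:
    """Read-only snapshot of routing and authorization rules (hypothetical shape)."""
    version: int
    routes: Mapping[str, str]        # path prefix -> upstream address
    scopes: Mapping[str, frozenset]  # path prefix -> scopes the caller must hold


class DataPlane:
    """Processes requests against whichever snapshot is currently installed."""

    def __init__(self, config: GatewayConfig) -> None:
        self._config = config

    def install(self, config: GatewayConfig) -> None:
        # Replace the snapshot reference; the old snapshot is never mutated.
        self._config = config

    def handle(self, path: str, granted: set) -> tuple:
        config = self._config  # read once, so a request never mixes versions
        matches = [p for p in config.routes if path.startswith(p)]
        if not matches:
            return 404, None
        prefix = max(matches, key=len)  # longest-prefix match
        if not config.scopes.get(prefix, frozenset()) <= granted:
            return 403, None
        return 200, config.routes[prefix]


class ControlPlane:
    """Validates new rules and pushes them to every registered data plane."""

    def __init__(self) -> None:
        self._planes: list = []
        self._version = 0

    def register(self, plane: DataPlane) -> None:
        self._planes.append(plane)

    def publish(self, routes: dict, scopes: dict) -> GatewayConfig:
        # Reject authorization rules that refer to routes that do not exist.
        unknown = set(scopes) - set(routes)
        if unknown:
            raise ValueError(f"auth rules for unknown routes: {sorted(unknown)}")
        self._version += 1
        snapshot = GatewayConfig(
            version=self._version,
            routes=MappingProxyType(dict(routes)),
            scopes=MappingProxyType({k: frozenset(v) for k, v in scopes.items()}),
        )
        for plane in self._planes:
            plane.install(snapshot)
        return snapshot


if __name__ == "__main__":
    empty = GatewayConfig(0, MappingProxyType({}), MappingProxyType({}))
    control, data = ControlPlane(), DataPlane(empty)
    control.register(data)
    control.publish({"/orders": "orders-svc:8080"}, {"/orders": {"orders:read"}})
    print(data.handle("/orders/42", {"orders:read"}))  # (200, 'orders-svc:8080')
    print(data.handle("/orders/42", set()))            # (403, None)
```

Because each request reads the snapshot reference once, it sees either the old rules or the new ones, never a mix. The validation step in `publish` shows one place where a control plane can catch an authorization misconfiguration before it reaches any data plane.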

There are several advantages to having a dedicated control plane that synchronizes your API gateway configuration across data centers:

- Lower latency – with a local copy of the routing and authorization rules, each gateway avoids looking those rules up in another data center

- Improved uptime – if your routing and authorization rules exist only in your original data center, an outage there could ripple out to every other data center; local copies let the other data centers keep serving requests

- Consistency – without a control plane to keep configurations synchronized, customers may see inconsistent results from their API calls, e.g., the first request succeeds in the primary data center, but the next one fails when it is routed to a second data center
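These advantages can be illustrated with a small pull-based Python sketch, assuming a hypothetical in-memory control plane store and regional gateways that poll it. Requests are served from a local cache (lower latency), a failed sync leaves the last known good configuration in place (improved uptime), and a version number shows whether every region has converged (consistency). `ControlPlaneStore`, `RegionalGateway`, and the `reachable` flag are illustrative names only, not any particular gateway's sync protocol.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ControlPlaneStore:
    """Central source of truth for gateway config (hypothetical, in-memory)."""
    version: int = 0
    config: dict = field(default_factory=dict)
    reachable: bool = True  # set to False to simulate an outage or partition

    def publish(self, config: dict) -> None:
        self.version += 1
        self.config = dict(config)

    def fetch(self, known_version: int) -> Optional[tuple]:
        """Return (version, config) if newer than known_version, else None."""
        if not self.reachable:
            raise ConnectionError("control plane unreachable")
        if self.version == known_version:
            return None
        return self.version, dict(self.config)


@dataclass
class RegionalGateway:
    """A data plane in one region that serves from its local cached copy."""
    region: str
    version: int = 0
    cache: dict = field(default_factory=dict)

    def sync(self, store: ControlPlaneStore) -> bool:
        """Pull the latest config; on failure keep the last known good copy."""
        try:
            update = store.fetch(self.version)
        except ConnectionError:
            return False
        if update is not None:
            self.version, self.cache = update
        return True

    def route(self, path: str) -> Optional[str]:
        # Local dictionary lookup: no cross-region call on the request path.
        return self.cache.get(path)


if __name__ == "__main__":
    store = ControlPlaneStore()
    gateways = [RegionalGateway("us-east"), RegionalGateway("eu-west")]
    store.publish({"/orders": "orders-svc:8080"})
    for gw in gateways:
        gw.sync(store)
    store.reachable = False  # simulate a control plane outage
    for gw in gateways:
        # The sync fails, but each region keeps routing from its cache.
        print(gw.region, gw.sync(store), gw.version, gw.route("/orders"))
    # us-east False 1 orders-svc:8080
    # eu-west False 1 orders-svc:8080
```

Polling keeps the sketch short; a production control plane would more likely push or stream updates and alert on any region whose version lags behind.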