This blog post summarizes a session from LEAP 2.0: The API governance conference, featuring key takeaways and insights. Explore the full on-demand videos, slides, and more here.

A race is underway as businesses seek to harness the power of AI in a way that delivers success while minimizing risk. Machine-to-machine communication, AI-driven service creation and automated provisioning and access present myriad opportunities. However, the enterprises that will win the AI-enabled race are those that leverage these opportunities while also enforcing secure, safe and trusted methods of API access and consumption, with full control over and visibility into their data assets and services. How? By implementing AI governance in a way that underpins success across the supply chain.

It’s a topic that Tyk CEO Martin Buhr brought up at the LEAP 2.0 API Governance Conference. Read on for Martin’s insights into AI governance in action, including:

- Why getting AI governance right is so important

- What the building blocks of AI adoption are

- Why choice, confidence and flexibility are at the heart of a successful AI supply chain

- What Tyk AI Studio is, and how it relates to AI governance

Introducing the AI market

Thinking about how a modern smartphone is built provides a useful analogy for the AI market. Smartphones contain thousands of different parts, and how a vendor chooses to integrate those parts falls into one of two camps. In one camp are companies like Apple, which tries to control almost everything in the iPhone that is critical to its value, from hardware to software. In the other camp, we have Android, with its ecosystem of different manufacturers, chip makers, app developers and even apps available through multiple storefronts.

In an iPhone, very few features are replaceable. In an Android device, depending on your model, almost every software feature is customizable and replaceable, and there is a very large market for third-party parts and components.

AI is headed down a similar path. We’re at an inflection point where the market will bifurcate into two similar camps: The all-encompassing bet on artificial general intelligence (AGI) versus an open market of tooling. More on that in a minute. First, we need to consider the role of AI governance.

Why is AI governance important?

When it comes to the enterprise, AI governance is incredibly important – for precisely the reason that the AI supply chain is important.

When we talk about AI governance, we often get stuck on high-level ethics and existential risks, such as bias and alignment problems. These should certainly factor into your decision-making process, but today we’re focusing on something far more tangible: The supply chain that makes AI work in the enterprise.

Ask yourself: Who controls AI in the enterprise? Should businesses be locked into a single vendor’s AI ecosystem, or should they have the flexibility to mix and match AI models, tools and platforms to suit their needs, much like any software problem?

The value of flexibility

Consider flexibility. This is something most IT managers are familiar with. A SaaS vendor doesn’t want you to buy just one product; it wants you to buy its whole ecosystem.

Some AI providers want the same – to be a one-stop shop for AI. They build the engine and an ecosystem of tools and features that add value around it, hoping to capture the whole market.

But, increasingly in this economy, businesses need modularity. If you’re locked into one ecosystem, how do you adapt when a better model comes along, or a cheaper provider appears? More importantly, what about when your business tactics and strategy shift over time?

That makes composability and flexibility critical considerations when evaluating which AI vendors to adopt.

Who owns your AI outputs?

Another key consideration is that AI models are black boxes. So, how do you ensure sensitive data isn’t leaked or misused by a vendor? You can only control so much. Once that data leaves your network, it’s fair game; a SaaS vendor is ultimately just someone else’s PC.

More concerningly, most terms of service are flexible and can be changed at any time. And, apart from standards bodies, there is no enforcement or verification mechanism to establish trust.

Of course, in an enterprise agreement, lawyers spend inordinate amounts of time building out safeguards, but, ultimately, that’s just a piece of paper. It’s not the same confidence you get when you do things yourself.

And now companies are struggling with shadow AI: Employees are using unauthorized AI tools and exposing their organizations to data leaks. Suddenly, a document that an employee polished with an AI tool becomes fodder for a recommendation engine in someone else’s integrated development environment (IDE).

The need for efficiency

Organizations always need to consider efficiency when it comes to the tooling. In this respect, AI is more like a virus than a consolidated solution at the moment. Teams are deploying AI in silos: Finance uses one model, customer support uses another and IT yet another, with no central oversight. That’s shadow IT at work, with AI. It’s inefficient and it’s costly.

Large organizations typically treat shadow IT as a problem to be solved through consolidation. But at very large scale, consolidation becomes counterproductive. This is why we’re seeing approaches such as the bring-your-own-device (BYOD) movement and federated API management.

The idea is really simple. Use what you want, when you want. Just tell the business about it first and maybe try using what the organization already has. The tricky part is that this can only work if you have oversight. If you don’t, these shadow use cases become uncontrollable.

The building blocks of AI adoption

At this point, let’s dive into the building blocks of AI adoption:

- Vendors: These are the providers of AI models, such as OpenAI, Anthropic and Meta.

- Interfaces: These are the applications where AI is used: Chatbots, IDEs and other developer tools, enterprise dashboards, assistants, code completion, sentence completion and the like.

- Data: This is the lifeblood that makes AI useful – your internal documents, customer interactions, logs and anything else you can feed in to make use of the power of the AI.

- Tooling: This is the governance layer that manages it all through monitoring, security and compliance.

All these components come into play when you’re trying to add value or improve the performance of a process. They’re the building blocks of AI tooling, which together form a pipeline:

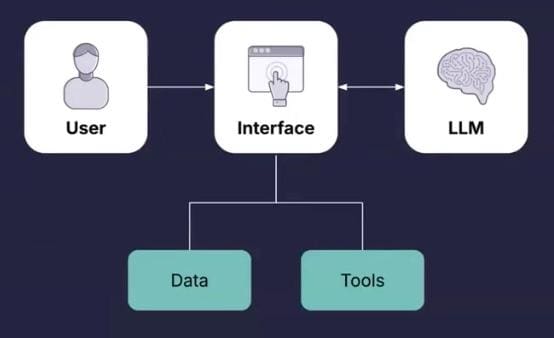

Let’s look at the interface component in a little more depth…

Introducing the AI supply chain

The interface in the example above could be a custom app, some kind of portal or a chatbot in your application, pulling data from a database to enhance and ground its responses from the model. And if it’s an integration or an action-oriented process, it also serves up the tooling – the integrations – that the LLM can use to perform actions if it decides they’re warranted.
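To make this flow concrete, here is a minimal Python sketch of an interface that grounds a prompt in internal data, advertises the tools the model may use, and runs a tool when the model asks for it. Everything here is a hypothetical illustration. `Tool`, `retrieve_context`, `build_request` and `run_tool_call` are invented names, the keyword-overlap retrieval stands in for a real search or vector store, and the request shape is deliberately vendor-neutral rather than any vendor’s actual format.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    """An action the model may ask the interface to perform (hypothetical)."""
    name: str
    description: str
    run: Callable[[dict], str]


def words(text: str) -> set[str]:
    """Lowercased word set, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve_context(query: str, documents: dict[str, str]) -> list[str]:
    """Data: naive keyword overlap, standing in for a real retrieval step."""
    query_words = words(query)
    return [text for text in documents.values() if query_words & words(text)]


def build_request(query: str, context: list[str], tools: list[Tool], model: str) -> dict:
    """Interface: ground the prompt in retrieved data and advertise the tools.

    The payload shape is illustrative only; each vendor defines its own.
    """
    grounding = "\n".join(context)
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": f"Answer using only this context:\n{grounding}"},
            {"role": "user", "content": query},
        ],
        "tools": [{"name": t.name, "description": t.description} for t in tools],
    }


def run_tool_call(name: str, arguments: dict, tools: list[Tool]) -> str:
    """Tools: execute an action the model requested, if the interface offers it."""
    for tool in tools:
        if tool.name == name:
            return tool.run(arguments)
    raise KeyError(f"tool not offered to the model: {name}")


docs = {
    "refunds": "Refunds are issued within 14 days of a return.",
    "holidays": "Staff receive 25 days of holiday allowance.",
}
ticket_tool = Tool("create_ticket", "Open a support ticket", lambda args: "ticket opened")
question = "How are refunds issued?"
request = build_request(question, retrieve_context(question, docs), [ticket_tool], "example-model")
```

Each step is a separate dependency (data source, model vendor, tool), which is exactly where hard-wired integrations start to pile up.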

While this is pretty simple at the highest level, integrations open up a whole host of complexity, including (but not limited to) hard-wired integrations with the two dependencies shown above: data and tools. This is where we need to introduce the concept of the AI supply chain.

In every other industry – manufacturing, software, shipping and so on – supply chains enable specialization and flexibility. AI should work the same way.

Under the hood, the pipeline we’ve shown above is essentially just a series of API calls. AI ultimately derives its value and purpose from the agency and knowledge its users give it, and the underlying transport for that knowledge and agency is the humble API call.

APIs have come a long way since the days of SOAP and XML. We have the OpenAPI spec for transportable API definitions and the AsyncAPI spec attempting to do the same for event-driven APIs. While we still don’t have a single unifying standard, we do have APIs that the humble human can still use with some rudimentary tooling, without the need for massive libraries or SDKs to build integrations.

We also have industry-wide standards, such as open banking. Financial interoperability has created massive flexibility in the fintech industry and greatly expanded what you can do with your financial apps, all because of standardization.

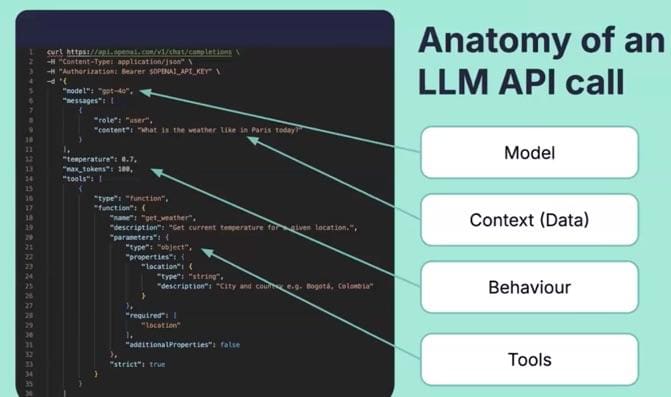

Like banking APIs, AI APIs are a bit different from APIs in general: They are a class of APIs that are inherently similar to one another. Most LLMs take the same parameters to tune their behavior. The more advanced ones accept different input types – so, if they’re multimodal, they take images, audio, video, etc. Others have a fantastic tool-use capability, where the model can request a function call that your application then executes on your machine or server, letting the model actually do something in the world.

Let’s take a quick look at the anatomy of an LLM API call.

This is an example of an OpenAI API call. If you look at an Anthropic one, as another example, you’ll see that it’s really similar. They have all the same components, but they’re still just different enough to need a custom integration with that LLM, even though the output generally is the same.

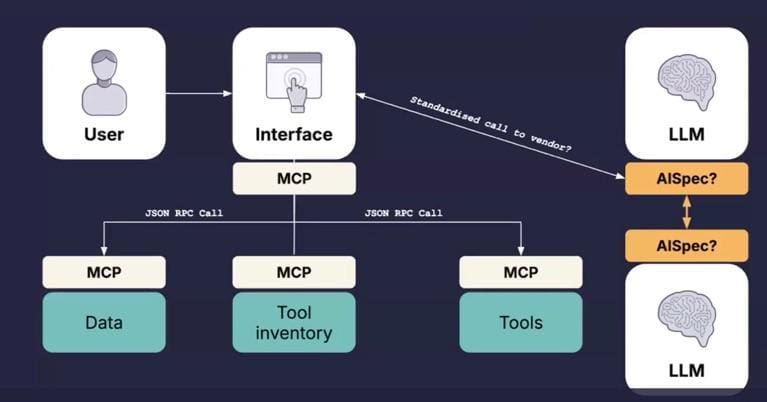
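To make the "just different enough" point concrete, here is a minimal Python sketch of an adapter. It translates one vendor-neutral request into request bodies for OpenAI’s Chat Completions API and Anthropic’s Messages API, then pulls the text back out of each response. The `ChatRequest` type, the function names and the model string are hypothetical. The field names reflect the two public APIs as we understand them, so check each vendor’s current reference before relying on them. No HTTP calls are made.

```python
from dataclasses import dataclass


@dataclass
class ChatRequest:
    """A hypothetical vendor-neutral request used inside your own stack."""
    model: str
    system: str
    user: str
    max_tokens: int = 512
    temperature: float = 0.7


def to_openai(req: ChatRequest) -> dict:
    """Body for OpenAI's Chat Completions API (POST /v1/chat/completions)."""
    return {
        "model": req.model,
        # The system prompt travels as the first entry in `messages`.
        "messages": [
            {"role": "system", "content": req.system},
            {"role": "user", "content": req.user},
        ],
        # Optional here; OpenAI documents max_completion_tokens for this limit.
        "max_completion_tokens": req.max_tokens,
        "temperature": req.temperature,
    }


def to_anthropic(req: ChatRequest) -> dict:
    """Body for Anthropic's Messages API (POST /v1/messages)."""
    return {
        "model": req.model,
        # The system prompt is a top-level field, not a message.
        "system": req.system,
        "messages": [{"role": "user", "content": req.user}],
        # Required by the Messages API.
        "max_tokens": req.max_tokens,
        "temperature": req.temperature,
    }


def extract_text(vendor: str, response: dict) -> str:
    """Pull the generated text out of each vendor's response body."""
    if vendor == "openai":
        return response["choices"][0]["message"]["content"]
    if vendor == "anthropic":
        # Responses carry a list of content blocks; keep only the text ones.
        return "".join(b["text"] for b in response["content"] if b["type"] == "text")
    raise ValueError(f"unsupported vendor: {vendor}")


req = ChatRequest(model="example-model", system="You are concise.", user="Summarize our refund policy.")
```

The components are the same, but the system prompt sits in a different place and the response envelopes differ. That is why each vendor still needs its own integration, and why a standardized downstream interface would remove this layer entirely.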

Anthropic is a vendor that is actively promoting the supply chain-ization of the AI ecosystem. Its Model Context Protocol (MCP) is particularly exciting, as it provides a clean approach to adding tooling and extensions to an AI model. Moreover, it provides those extensions to the application that provides the interface to that model, making it a way to connect that interface with the user’s machine and with accessible services.

As we saw above, the interface is a key component in how we interact with AI, and MCP makes it easy for the interface to interact with the world around it. While there’s some nuance to this, ultimately it decouples a chunk of the context work for LLM calls: MCP defines and standardizes the interfaces between the LLM-facing interface and the available tools and data sources.

So, if your tool speaks MCP, it can be registered with the interface and made available to the LLM. And if you’re using another interface that also speaks MCP, perhaps an IDE or an integrated assistant, you can register the same service with it, and it instantly becomes available there too. This means you can swap interfaces and take your tooling with you: Your tooling becomes transportable.
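To illustrate why tooling becomes portable, here is a minimal Python sketch of the messages exchanged with an MCP tool server. It hand-rolls the JSON-RPC 2.0 `tools/list` and `tools/call` exchanges rather than using an official MCP SDK. It also omits the transport and the initialization handshake that a real server needs. The `lookup_order` tool, its order data and the `handle`, `reply` and `discover_tools` helpers are hypothetical.

```python
import json

# Hypothetical tool definition; MCP describes tool inputs with JSON Schema.
TOOLS = [{
    "name": "lookup_order",
    "description": "Look up the status of an order by its ID",
    "inputSchema": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}]
ORDERS = {"A-100": "shipped"}  # hypothetical backing data


def reply(request_id, result=None, error=None) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response envelope."""
    body = {"jsonrpc": "2.0", "id": request_id}
    if error is not None:
        body["error"] = error
    else:
        body["result"] = result
    return json.dumps(body)


def handle(message: str) -> str:
    """Answer one JSON-RPC request the way a minimal MCP tool server might."""
    req = json.loads(message)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        return reply(req["id"], result={"tools": TOOLS})
    if method == "tools/call":
        if params.get("name") != "lookup_order":
            return reply(req["id"], error={"code": -32602, "message": f"Unknown tool: {params.get('name')}"})
        order_id = params["arguments"]["order_id"]
        text = f"Order {order_id}: {ORDERS.get(order_id, 'not found')}"
        return reply(req["id"], result={"content": [{"type": "text", "text": text}], "isError": False})
    return reply(req["id"], error={"code": -32601, "message": "Method not found"})


def discover_tools(send) -> list[str]:
    """What any MCP-speaking interface (IDE, chat app, assistant) does to find tools."""
    response = json.loads(send(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
    return [tool["name"] for tool in response["result"]["tools"]]
```

Because `discover_tools` depends only on the protocol messages and not on any particular interface, the same server could be registered with an IDE, a chat app or an assistant without changes.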

This is an excellent first step towards making the data supply side of context management – this upstream part of the supply chain – more modular, and towards making it much easier for tool creators to get involved and supply value into the wider AI ecosystem. It’s the kind of hyper-specialization we see in modern supply chains.

Of course, it’s still only a first step. What it would be good to see from LLM vendors is standardization of their downstream interfaces, meaning the actual API calls to the service, and potentially even a common interface across LLMs.

Let’s look at how a modular AI supply chain works for an enterprise.

Exploring enterprise AI supply chains

To get real value from their AI journey, enterprises need solid governance. They also need choice (in the form of vendor-agnostic AI), the confidence that comes from built-in security and governance, and enough flexibility to fit AI around their workflows.

Choice

Enterprises want the ability to swap AI providers without major disruption. This is super tricky, not just from an operational perspective but also from a behavioral one, since models behave very differently depending on their type. Standard chat models, for example, behave very differently from reasoning models, which are trained to think through a problem before they respond.

There’s also the issue of bias. Alignment and bias are serious downstream problems, and there needs to be a better, more standardized way to deal with them. For example, to manage bias in OpenAI’s GPT-4 or in Llama (Meta’s LLM), you can use a fine-tune, which you create, train and test yourself on top of the base model. But you can’t move that fine-tune from one vendor to another; you have to repeat the process for each new LLM.

Bias management like this would be an ideal candidate for standardization and for plugging into the AI supply chain. It would be really hard to do, as it’s such a highly specialized component, but there’s serious value to be unlocked there.

When it comes to your AI governance model, you need a strong opinion on how you want to tackle things. Moreover, you need processes and tooling to make swapping elements easier. That’s why AI supply chains, where different vendors provide specialized components, are critical.

Confidence

Businesses must ensure AI is used with the right data, by the right people, using the right tools. That doesn’t mean just handing everything to one vendor.

Data is where you can really make your AI adoption work for you. You can use your whole mass of customer, product, market and operational data. If you’re a really data-driven enterprise, you may even have a decent data strategy and data warehouses with structured data ready for processing. If you can supply this data to AIs, you can unlock all kinds of productivity gains across your departments.

The risk, of course, is the data leakage we mentioned earlier. However, a good governance framework, standards and tooling should provide confidence that the data being sent to LLM vendors won’t end up in a training set or in a log somewhere outside your locus of control.

Flexibility

Enterprises need the flexibility to build workflows that don’t require the workforce to completely change how they work. One of the wonders of AI is how easy it is to interact with, since it’s just like speaking to a person. However, you don’t want everything to live in a chat window; chat is a terrible interface for procedural work. Ultimately, you want the flexibility to integrate tooling anywhere you need it – which was the promise of APIs. And it shouldn’t require a chatbot to work with it!

So, as part of your governance decisions, you need to work out how you can embed AI seamlessly into your existing processes, and evaluate the tooling that lets you do so, such as Tyk AI Studio. Using Tyk AI Studio, enterprises own their supply chain, enjoying a choice of vendors, confidence in governance and security, and the flexibility to integrate AI into the business, rather than having to mold the business around the AI.

You can use Tyk AI Studio as a governance gateway that lets you control which AI models can be accessed and by whom. It takes a security-first approach to AI adoption, routing AI requests and enforcing policies with built-in compliance, budgeting and observability. And it all works hand-in-hand with your API management strategy as you manage more and more AI interactions in your business.
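As a rough illustration of the decision a governance gateway makes on each request, here is a minimal Python sketch that combines per-team model allowlists, budgets and an audit trail. It is not Tyk AI Studio’s API or configuration format. `Policy`, `Gateway`, `authorize`, the team names, the model names and the cost figures are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    """Per-team rules: which models may be called and how much may be spent."""
    allowed_models: set[str]
    monthly_budget_usd: float
    spent_usd: float = 0.0


@dataclass
class Gateway:
    policies: dict[str, Policy]
    # (team, model, decision) for every request, allowed or not.
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def authorize(self, team: str, model: str, estimated_cost_usd: float) -> bool:
        """Decide whether to forward a request to the model vendor."""
        policy = self.policies.get(team)
        if policy is None:
            decision = "deny: unknown team"
        elif model not in policy.allowed_models:
            decision = "deny: model not allowed"
        elif policy.spent_usd + estimated_cost_usd > policy.monthly_budget_usd:
            decision = "deny: budget exceeded"
        else:
            # Only allowed requests count against the budget.
            policy.spent_usd += estimated_cost_usd
            decision = "allow"
        self.audit_log.append((team, model, decision))
        return decision == "allow"


gateway = Gateway({
    "finance": Policy(allowed_models={"model-a"}, monthly_budget_usd=100.0),
    "support": Policy(allowed_models={"model-a", "model-b"}, monthly_budget_usd=50.0),
})
```

Centralizing this check gives the business the oversight that shadow AI lacks. A production gateway would also handle authentication, routing to vendors and actual rather than estimated costs.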

Want to know more? Put your questions to our friendly Tyk experts and we’ll be happy to help!