Whether you’re an engineer, product leader or policymaker, having a practical blueprint for integrating AI in a way that’s governed, efficient, and future-ready has never been more essential. Read on to discover how to build a well-governed, sustainable AI supply chain that doesn’t fall apart under pressure and that works at scale without sacrificing security or control. We’ll cover:

- How to structure an AI supply chain for flexibility, security and scale

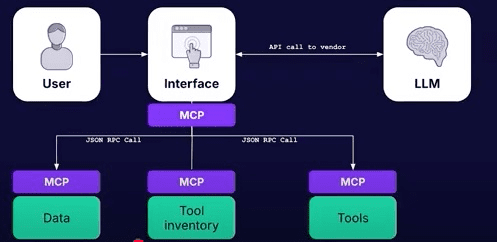

- The role of large language model (LLM) APIs and Model Context Protocol (MCP) as key players in AI interoperability

- The benefits of structured toolchains vs. AI operators – because one size doesn’t fit all

- How APIs serve as the transport layer for knowledge and decision-making in AI systems

Prefer to watch rather than read? Then check out our on-demand webinar on AI, APIs and the path to AI readiness.

Building solid foundations

AI is changing the game, but without a solid foundation, it can quickly become a house of cards. Effective AI governance isn’t just about ticking boxes; it’s about building a structured AI supply chain that ensures everything stays reliable, accountable and efficient. This requires four key components:

- Vendors: AI model providers such as OpenAI, Anthropic, Meta and so on

- Interfaces: Applications that use AI (chatbots, dev tools, enterprise dashboards and the like)

- Data: The internal docs, customer interactions, logs and so on, which make your AI useful

- Tooling: The monitoring, security and compliance that make up your governance layer

All four are pivotal in making your AI systems work smoothly – along with your APIs, of course – and they need to be aligned if you want those systems to scale reliably and securely.

Structuring the AI supply chain for enterprises

Tyk CEO Martin Buhr recently shared his thoughts on structuring the AI supply chain. He drew on the example of smartphones, which are divided into two paths: deeply integrated products (such as Apple) and open and distributed ecosystems (such as Android). Just as developers have to choose between Apple’s controlled environment and Android’s open one, enterprise teams today face a similar choice with their AI infrastructure. However, unlike consumer devices, enterprise AI systems must balance innovation, scalability and agility with governance, security and compliance.

We’re currently at a bit of an inflection point, with the AI market dividing into two camps: closed ecosystems controlling the entire stack and more modular components that you can mix and match. It means you have to choose between building on someone else’s terms or creating an architecture that can evolve as both AI technology and your teams mature. And the architectural decisions that you make today will determine your AI technical debt for years to come. So, no pressure!

APIs are the connective tissue of this AI supply chain. They enable the modularity that makes enterprise AI both powerful and governable, making robust API management fundamental to AI success and to a flexible supply chain approach that helps future-proof your investments.

Critical considerations for AI readiness

While different teams are taking the lead on AI in different businesses, from a tooling perspective right now it is engineering and development teams that are best equipped to govern the AI supply chain effectively.

These teams have three critical considerations when it comes to AI readiness:

- Flexibility

- Compliance

- Efficiency

Maintaining flexibility requires you to consider the issue of vendor lock-in. Switching between vendors’ models comes at a high price if those models only run on exclusive runtimes.

We’re all familiar with the SaaS playbook – an engine surrounded by features that are hard to leave. Alternatively, a supply chain approach means you can focus on creating value, choosing the right components for each use case. It gives you the flexibility to shift your strategy, embrace better models as they emerge and so on, making composability a sound strategic choice for many enterprises.

In terms of security and data compliance risks, you need to think about who owns your AI outputs. Models are black boxes, putting data beyond your control once it leaves your network. Then there’s the problem of shadow AI, with employees using unauthorized tools that can expose your enterprise to data leaks. Keeping sensitive information under your control while still leveraging emerging and best-in-class models isn’t simple.

Scaling AI the right way also means considering operational efficiencies. Many enterprises currently have AI proof-of-concepts (POCs) scattered across departments, with no central oversight. Nor are these POCs just limited to technical teams – people teams, marketing teams, operational teams, development teams, product teams, design teams… Everyone is using AI.

Tackling this is a bit like embracing federated API management: You can empower teams to use what they need, but you need to know about it centrally, with visibility and standards embedded into your AI supply chain.

The foundation of enterprise AI adoption

As we mentioned above, enterprise AI adoption is built on vendors, interfaces, data and tooling. APIs connect all of these components, standardizing the interactions that enable the composability and the modularity between these elements.

When you’re trying to add value or improve a process or performance, all of these components come into play. Consider a real-world example: A financial firm deploying an AI chatbot for customer support. The firm would need a model (like GPT-4), an internal knowledge base (its internal documentation and previous customer support tickets), and compliance guardrails to ensure the accuracy of answers. These components form a pipeline, working together to create value – which is what the AI supply chain is.
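The chatbot pipeline described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production design: the knowledge base entries, the blocked phrases, the fallback message and the `stub_model` function are all hypothetical stand-ins, and a real deployment would call a vendor model over its API instead of the stub.

```python
from typing import Callable

# Hypothetical knowledge base standing in for internal docs and past support tickets.
KNOWLEDGE_BASE = {
    "card": "Lost cards can be frozen from the mobile app under Settings > Cards.",
    "fees": "Standard accounts have no monthly maintenance fee.",
}

# Hypothetical compliance rule: phrases a support bot must never send to a customer.
BLOCKED_PHRASES = ("guaranteed return", "investment advice")

FALLBACK = "I can't answer that reliably, so I'm passing you to a human agent."


def retrieve(question: str) -> list[str]:
    """Data step: return entries whose topic keyword appears in the question."""
    lowered = question.lower()
    return [text for topic, text in KNOWLEDGE_BASE.items() if topic in lowered]


def stub_model(prompt: str) -> str:
    """Vendor step: a stand-in for a real LLM call that just repeats the context."""
    context = prompt.split("Context:\n", 1)[1].split("\nQuestion:", 1)[0]
    return f"Based on our documentation: {context}"


def passes_guardrails(draft: str) -> bool:
    """Tooling step: reject drafts containing any blocked phrase."""
    lowered = draft.lower()
    return not any(phrase in lowered for phrase in BLOCKED_PHRASES)


def answer(question: str, model: Callable[[str], str] = stub_model) -> str:
    """Interface step: run retrieval, the model call and the guardrail in order."""
    context = retrieve(question)
    if not context:
        return FALLBACK  # never ask the model to answer without grounding
    prompt = "Context:\n" + "\n".join(context) + f"\nQuestion: {question}"
    draft = model(prompt)
    return draft if passes_guardrails(draft) else FALLBACK


if __name__ == "__main__":
    print(answer("I lost my card, what should I do?"))
```

Because the model is passed in as a plain callable, the retrieval and guardrail steps stay the same when the vendor behind it changes, which is the point of treating these components as a supply chain.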

Supply chains enable specialization and flexibility, as we see in the manufacturing, software, shipping and myriad other industries. That’s why the AI supply chain is so important to your future success.

AI supply chain models

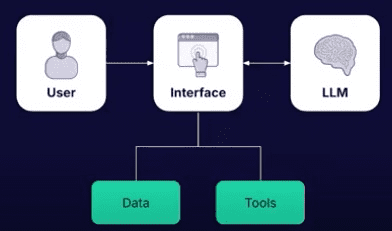

The AI supply chain has two main emerging models. The first is a point-to-point model based on user-to-LLM interaction, as shown here:

In this model, the user interacts with the LLM via an interface underpinned by data and tooling. The user gives tasks and the LLM performs a job in response.

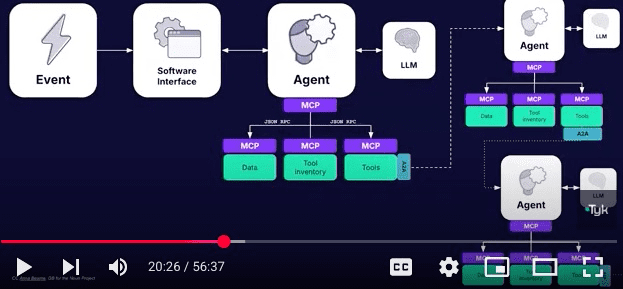

The other model – agentic AI – is more complex. We can see this point-to-network model, where users interact with one or more agents, outlined here:

In this model, work is not triggered by the user but by an event. For example, submitting a pull request could trigger a code review, running the agent. With agents able to talk to one another, the model can become quite complex, making it tough to track where data is coming from. This is one area where Tyk AI Studio shines; it deeply integrates to analyze API calls and extract metadata, providing you with a full analytics audit.
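To make the tracking problem concrete, here is a small sketch of event-triggered agents that call one another while every hop is written to an audit log. It is an illustration only and does not represent how Tyk AI Studio works internally: `AgentBus`, `AuditRecord`, the event names and both agents are hypothetical, and the "review" is a placeholder that counts lines rather than calling a model.

```python
from dataclasses import dataclass, field
from typing import Callable

Handler = Callable[["AgentBus", dict], str]


@dataclass
class AuditRecord:
    caller: str              # who emitted the event (webhook or another agent)
    callee: str              # which agent received it
    event_type: str
    payload_keys: list[str]  # record field names only, not the data itself


@dataclass
class AgentBus:
    """Hypothetical in-process event bus that logs every agent invocation."""
    handlers: dict[str, list[tuple[str, Handler]]] = field(default_factory=dict)
    audit_log: list[AuditRecord] = field(default_factory=list)

    def subscribe(self, event_type: str, agent_name: str, handler: Handler) -> None:
        self.handlers.setdefault(event_type, []).append((agent_name, handler))

    def emit(self, event_type: str, payload: dict, source: str) -> list[str]:
        results = []
        for agent_name, handler in self.handlers.get(event_type, []):
            # Log before running, so a failing agent still leaves a trace.
            self.audit_log.append(
                AuditRecord(source, agent_name, event_type, sorted(payload))
            )
            results.append(handler(self, payload))
        return results


def code_review_agent(bus: AgentBus, payload: dict) -> str:
    """Placeholder review: counts diff lines, then hands off to the next agent."""
    changed = len(payload["diff"].splitlines())
    finding = f"Reviewed PR {payload['pr_id']}: {changed} changed lines"
    bus.emit(
        "review.completed",
        {"pr_id": payload["pr_id"], "finding": finding},
        source="code-review-agent",
    )
    return finding


def notifier_agent(bus: AgentBus, payload: dict) -> str:
    return f"Notified author of PR {payload['pr_id']}"


if __name__ == "__main__":
    bus = AgentBus()
    bus.subscribe("pull_request.opened", "code-review-agent", code_review_agent)
    bus.subscribe("review.completed", "notifier-agent", notifier_agent)
    bus.emit("pull_request.opened", {"pr_id": 7, "diff": "+a\n-b"}, source="git-webhook")
    for record in bus.audit_log:
        print(f"{record.caller} -> {record.callee} ({record.event_type})")
```

Recording the caller and callee on every hop is what lets you reconstruct where data came from once agents start triggering each other. In a real system those hops are API calls, so the natural place to capture this metadata is wherever those calls are routed.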

At the heart of all of these interactions are API calls. Whether you’re calling microservices or MCP tools, it all comes back to APIs.

As agentic AI grows, so will API usage. This means standards such as OpenAPI will not just remain relevant but be even more important as AI adoption increases. Having a clear structure for your APIs, and understanding what you’re exposing and how, will be essential to your AI success.

MCP brings notable benefits to the AI supply chain around communication and standardization, supporting interconnection between different vendors. It’s how agents talk to the world. Complementing this is Google’s Agent2Agent (A2A) Protocol, which enables communication between different agents.

Within this complex structure, it’s essential to track and understand how and where your data is used, all while providing your team with the latest AI tools under a governance framework that supports innovation and progress.

How a composable enterprise AI supply chain works

Organizations need the ability to swap AI models or providers without disruption. However, at present, different API types, different clients, behavioral differences between models and differences in alignment and bias tuning all make this a challenge. This means your governance model needs processes and tooling that make swapping models easier. That’s why AI supply chains, where different vendors provide different components, are critical.
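One common way to make swapping easier is to put a thin adapter in front of each vendor so the rest of your code depends on a single interface. The sketch below assumes two invented vendors with deliberately different, made-up response shapes; `ChatProvider`, `VendorAAdapter`, `VendorBAdapter` and `ModelRouter` are hypothetical names, and the `_raw_call` methods stand in for real network calls.

```python
from typing import Protocol


class ChatProvider(Protocol):
    """The one interface the rest of the application is allowed to depend on."""

    name: str

    def complete(self, prompt: str) -> str: ...


class VendorAAdapter:
    name = "vendor-a"

    def _raw_call(self, prompt: str) -> dict:
        # Stand-in for a network call; this response shape is invented.
        return {"choices": [{"message": {"content": f"A says: {prompt}"}}]}

    def complete(self, prompt: str) -> str:
        return self._raw_call(prompt)["choices"][0]["message"]["content"]


class VendorBAdapter:
    name = "vendor-b"

    def _raw_call(self, prompt: str) -> dict:
        # A second invented shape, to show why normalization is needed.
        return {"output": [{"text": f"B says: {prompt}"}]}

    def complete(self, prompt: str) -> str:
        return self._raw_call(prompt)["output"][0]["text"]


class ModelRouter:
    """Routes every request to the active provider; switching is one call."""

    def __init__(self, providers: list[ChatProvider], default: str) -> None:
        self._providers = {p.name: p for p in providers}
        self.switch(default)

    def switch(self, name: str) -> None:
        if name not in self._providers:
            raise KeyError(f"unknown provider: {name}")
        self._active = self._providers[name]

    def complete(self, prompt: str) -> str:
        return self._active.complete(prompt)


if __name__ == "__main__":
    router = ModelRouter([VendorAAdapter(), VendorBAdapter()], default="vendor-a")
    print(router.complete("hello"))
    router.switch("vendor-b")
    print(router.complete("hello"))
```

An adapter layer like this only removes the API-shape and client differences. Behavioral, alignment and bias differences between models still need to be evaluated separately before you switch.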

Enterprises also need to ensure AI is used with the right data, by the right people using the right tools. Your proprietary data (your customer, operational, product, market and other data) is where the real value of AI lies. This means you need a good governance framework with standards and tooling that give you confidence that data you send to LLM vendors isn’t ending up in training sets or logs outside of your organization and your control.
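As one small example of such tooling, a filter can redact obviously sensitive values before a prompt leaves your network. The sketch below is an assumption-laden illustration: the two regular expressions are simplistic patterns chosen for the example, `redact` is a hypothetical helper, and pattern matching alone is not a complete approach to detecting personal data.

```python
import re

# Illustrative patterns only; real privacy filtering needs far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a placeholder and count what was removed."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        text, found = pattern.subn(f"[{label}]", text)
        counts[label] = found
    return text, counts


if __name__ == "__main__":
    prompt = "Email jane.doe@example.com about card 4111 1111 1111 1111 today"
    safe_prompt, counts = redact(prompt)
    print(safe_prompt)  # this is the only version that should reach a vendor
    print(counts)       # the counts can feed central monitoring
```

Running the filter at a central point that all model traffic passes through, rather than inside each application, means every team gets the same protection without having to reimplement it.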

The final element you need is the flexibility to build workflows that don’t force your teams to completely change how they work. This is important not just in AI but in all areas of your work – it’s one reason we’ve built Tyk’s products in a way that fits around you, rather than forcing you to do things a particular way.

As interactions with and around LLMs become standardized, we’re reaching a critical point where enterprises can unlock value through specialized providers in the AI supply chain. Therefore, in your AI readiness decisions, you need to look at how you can embed AI seamlessly into your existing tools and processes.

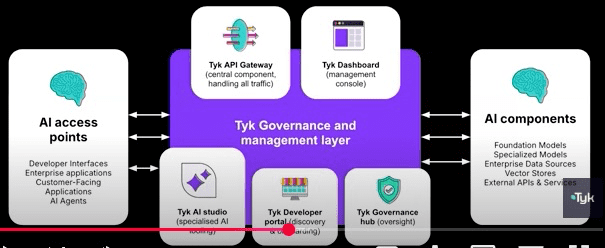

Here at Tyk, we’re ready to help. We have a comprehensive suite of capabilities for integrating, managing and governing AI alongside traditional APIs.

The newest of these features are Tyk AI Studio – our specialized AI tooling component for governance, budget controls, model connections, safety thresholds, privacy filtering and more – and the Tyk governance hub, for overseeing and auditing all your APIs and (soon) AI interactions. In addition, Tyk API Gateway handles everything from MCP server integration to security enforcement to traffic routing and protocol translation. Tyk Dashboard covers API and AI configuration, policy management and analytics, while the developer portal supports discovery and developer onboarding.

You can use Tyk AI Studio for a range of use cases, including:

- Internal AI experimentation with controlled access and budgets

- Seamless transition from POCs to production

- Secure exposure of AI capabilities to external developers

- Making existing APIs easily consumable by AI clients

- Exposing official company AI agents securely

All while maintaining governance, security and the great developer experience you get with Tyk! Why not speak to our team to find out more?