I recently completed a three-day API and microservices workshop for a software-as-a-service company. The company has been around for over 15 years and has never been given the opportunity to correct short-term fixes. As you may have guessed already, they carry significant technical debt.

The team decided that they want to move to microservices. I was asked to get everyone on the same page regarding fundamentals and good practices around designing microservices, including a focus on good API design. We worked through the material and had many great side discussions. By the end, they were both excited about what the future holds and a bit overwhelmed about all the details that go into a microservice journey: service boundaries, containerisation, distributed transactions using sagas, service meshes, and distributed data across many services – all while maintaining the existing legacy system.

Throughout our conversation, one theme became clear: they want to think smaller. It wasn’t that microservices was the perfect answer for them – only time will tell. The team wants smaller building blocks that they assemble to better serve their customers’ needs. Let’s look at what thinking smaller means as part of a microservice journey.

Modular Codebases Help Us Organise Better

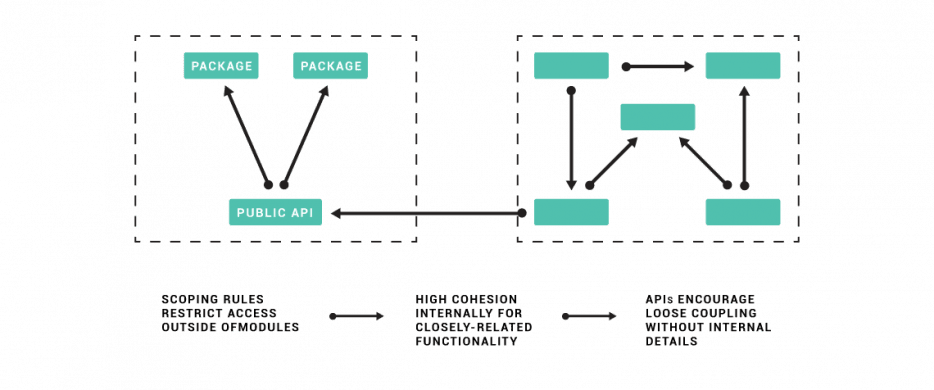

Modules are fundamental building blocks of software. They expose APIs within our codebase that hide internal details, resulting in a more loosely coupled architecture. We explored this concept some time ago in the article titled “Your Data Model Is Not an API”. As a quick reminder, modules are considered highly cohesive if related code is grouped together, and loosely coupled if we hide implementation details from the rest of the software.
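To make the idea concrete, here is a minimal sketch of a module that groups related code together and hides how it stores data. The `Billing` class, the `Invoice` type, and their method names are hypothetical, invented purely for illustration; they are not taken from the workshop or from any particular framework.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Invoice:
    """Public type: the only shape callers of the module ever see."""
    invoice_id: int
    customer_id: str
    total_cents: int


class Billing:
    """Hypothetical billing module whose public API is two methods."""

    def __init__(self) -> None:
        # Internal detail: how invoices are stored is hidden from callers.
        self._rows: dict[int, tuple[str, int]] = {}
        self._next_id = 1

    def create_invoice(self, customer_id: str, line_items_cents: list[int]) -> Invoice:
        # High cohesion: totalling and storing invoices live together here.
        invoice_id = self._next_id
        self._next_id += 1
        total = sum(line_items_cents)
        self._rows[invoice_id] = (customer_id, total)
        return Invoice(invoice_id, customer_id, total)

    def get_invoice(self, invoice_id: int) -> Invoice:
        customer_id, total = self._rows[invoice_id]
        return Invoice(invoice_id, customer_id, total)
```

Callers depend only on `create_invoice`, `get_invoice`, and the `Invoice` type. Swapping the in-memory dictionary for a database table should not require any change on their side, which is the loose coupling we are after.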

The proliferation of web frameworks over the last decade has favoured the quick assembly of solutions over modular software. As a result, we are seeing large codebases with a single, global module. We have allowed our codebase to be highly coupled.

Once the complexity of our software exceeds our ability to retain high cohesion and low coupling, we immediately declare monoliths as a poor choice and move to microservices. “Microservices will fix everything” we say. Except they won’t. Not unless we grasp these core concepts. Microservices are forcing us to visit, or revisit, the concepts of modular software once again.

Key takeaway: Thinking smaller requires that we think in terms of low coupling and high cohesion once more.

Boundaries Matter

Once we start to think of our solution as modular components, we realise that each area of our software can live in a smaller codebase of its own. Those choosing to go toward a microservice architecture have chosen to place a network boundary between these components.

When we choose to place clear boundaries around areas of our solutions, we lower the cognitive load required of a developer to understand a particular portion of the overall solution. We also limit the impact of a change to a particular area of the codebase, rather than having it ripple throughout the entire codebase.

Domain-driven design (DDD) offers some concepts that are powerful in creating clear boundaries around our solutions. DDD recognises that defining software boundaries requires an understanding of how the business works, not just how we compose our software. Subdomains allow us to group areas based on organisational structure. Bounded contexts allow us to draw lines around our domain models.
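As a rough illustration of drawing lines around domain models, the sketch below models the same business concept, a customer, differently in two bounded contexts and translates between them at the boundary. The context names (sales and support), the fields, and the priority rule are all assumptions made up for this example.

```python
from __future__ import annotations

from dataclasses import dataclass


# Sales context: a customer is an account with a plan and an account manager.
@dataclass(frozen=True)
class SalesCustomer:
    customer_id: str
    plan: str
    account_manager: str


# Support context: a customer is someone whose tickets have a priority.
@dataclass(frozen=True)
class SupportCustomer:
    customer_id: str
    priority: str


def to_support_customer(customer: SalesCustomer) -> SupportCustomer:
    """Translate at the boundary so support never sees the sales model."""
    # Hypothetical rule: enterprise plans get high-priority support.
    priority = "high" if customer.plan == "enterprise" else "normal"
    return SupportCustomer(customer.customer_id, priority)
```

Because each context owns its own model, adding a field to `SalesCustomer` only affects the support side if the translation function chooses to use it.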

While we don’t need microservices to achieve those boundaries, they are becoming an increasingly common way to define and enforce them. In short, we need a better way to organise solutions so that we are able to reason about the codebase and therefore reduce the chance of ripple effects when we make a change to the code.

Key takeaway: Smaller codebases with clear bounded contexts mean lower cognitive load on developers.

Reduced Team Coordination Increases Velocity

Every time developers need to attend a meeting, software velocity is reduced. The larger the solution, the more coordination meetings it tends to require. I’ve been there.

So, why do we call meetings where developers are disrupted? Because we are concerned about introducing breaking changes to our solution. The more fragile our codebase, the more likely this is the case and the more often we must meet to surface the intended change and try to identify areas of concern before we move forward.

To achieve stability alongside speed, we must seek to reduce the risk of change. Otherwise, we are doomed to sit in more meetings as the complexity of our software increases. This is expressed clearly in Chapter 1 of “Microservice Architecture” (Amundsen, McLarty, Mitra, Nadareishvili):

“Organisations that succeed with microservice architecture are able to maintain their system stability while increasing their change velocity.”

Key takeaway: Team coordination reduces software velocity. Seek to create independence through bounded contexts that offer clear APIs that isolate the impact of change.

Conclusion: Think Smaller

Microservices isn’t about frameworks. It is about thinking smaller. Perhaps microservices is the right choice for you. Perhaps a well-organised, modular monolith is a better choice for you. Or perhaps mini-monoliths that tackle a specific subdomain and have clear APIs and published messages would allow you to lower the cognitive load of your solution while avoiding the challenges of distributed computing common with a microservice-based architecture.

Whatever you choose, it is clear that software is becoming more complex and therefore thinking smaller is important as we build tomorrow’s software today.